Google Dynamic Remarketing Ads: A Deep Dive

Google Dynamic Remarketing Ads allow businesses to showcase products directly to website visitors who’ve previously expressed interest. Unlike static ads, this tool dynamically adjusts each ad to show the specific products that users have viewed or interacted with on your site. This targeted approach can substantially boost conversion rates and, as a result, deliver a higher ROI than traditional remarketing strategies. Understanding the nuances of campaign setup, audience segmentation, and performance tracking is crucial for getting the most out of Dynamic Remarketing Ads.

This comprehensive guide explores the intricacies of Google Dynamic Remarketing Ads, from fundamental concepts to advanced strategies. We’ll delve into audience targeting, campaign structure, content optimization, performance measurement, and advanced techniques. Discover how to effectively leverage Dynamic Remarketing to boost your online sales and drive significant business growth.

Introduction to Google Dynamic Remarketing Ads

Dynamic remarketing ads are a powerful tool for businesses to re-engage website visitors who haven’t completed a desired action, like making a purchase or filling out a form. They work by displaying customized product or service ads to these potential customers across the Google Display Network. This personalized approach increases the chances of conversion compared with generic, one-size-fits-all ads. Google Dynamic Remarketing Ads use data from your website to create highly targeted ads.

Instead of showing generic ads, they show ads for specific products or services that a user previously viewed on your website. This personalized approach leverages the user’s prior browsing history, making the ads more relevant and increasing the likelihood of a conversion.

Core Functionalities of Dynamic Remarketing Ads

Dynamic remarketing ads work by collecting data about user interactions on your website, such as products viewed, items added to a cart, pages visited, and other actions. This data is then used to create customized ads that display the specific products or services each user previously interacted with. The ads are shown across the Google Display Network, so they can reach those visitors on a wide range of sites and apps.
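The data side of this flow can be sketched in a few lines. The Python below is a minimal illustration, assuming a simple event log: it turns on-site interactions into a per-user list of distinct product IDs, most recent first, which is the kind of input a dynamic ad needs. The `Event` record and the `products_to_feature` helper are hypothetical; they only illustrate the idea and are not Google's actual product-selection logic.

```python
from dataclasses import dataclass

@dataclass
class Event:
    # Hypothetical event record: who did what to which product, and when.
    user_id: str
    action: str      # e.g. "view_item" or "add_to_cart"
    product_id: str  # should match the ID used in the product feed
    timestamp: int   # seconds since epoch

def products_to_feature(events, user_id, limit=3):
    """Return up to `limit` distinct product IDs for one user, most recent first."""
    user_events = sorted(
        (e for e in events if e.user_id == user_id),
        key=lambda e: e.timestamp,
        reverse=True,
    )
    featured = []
    for e in user_events:
        if e.product_id not in featured:
            featured.append(e.product_id)
        if len(featured) == limit:
            break
    return featured

events = [
    Event("u1", "view_item", "SKU-1", 100),
    Event("u1", "view_item", "SKU-2", 200),
    Event("u1", "add_to_cart", "SKU-1", 300),
    Event("u2", "view_item", "SKU-9", 150),
]
print(products_to_feature(events, "u1"))
```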

Benefits of Dynamic Remarketing Ads Compared to Static Ads

Dynamic remarketing ads offer several advantages over static ads. Because they showcase products that users have already expressed interest in, they are more relevant and engaging than static ads, which show the same generic content to everyone. That relevance makes them more likely to resonate with potential customers and tends to lift conversion rates.

The improved targeting can translate into a higher return on investment (ROI).

Key Differences Between Dynamic Remarketing and Other Remarketing Types

Dynamic remarketing differs from other remarketing types in its personalized approach. Standard remarketing often shows generic ads based on past site visits, without specific product details. Dynamic remarketing, in contrast, displays tailored ads featuring the exact products a user previously interacted with. This product-level relevance is the main reason dynamic remarketing tends to achieve higher conversion rates than other remarketing methods.

Comparison Table

| Ad Type | Target Audience | Expected Outcomes |
|---|---|---|
| Static Remarketing | Users who have visited the website before. | Increased brand awareness, website traffic, and potential conversions. |
| Dynamic Remarketing | Users who have shown interest in specific products or services on the website. | Higher conversion rates, increased sales, and improved ROI. |

Target Audience & Segmentation

Dynamic remarketing relies heavily on precisely identifying and segmenting your potential customers. This crucial step enables highly targeted ad campaigns, which optimize ad spend and improve conversion rates. Understanding your audience’s behavior and preferences lets you craft personalized messages that resonate with their needs and desires. Effective segmentation allows for tailored messaging and ad experiences that speak directly to different customer groups.

This is a powerful technique to maximize the return on investment from your dynamic remarketing campaigns.

Identifying Potential Customers

Identifying potential customers for dynamic remarketing involves analyzing various data points. This includes past purchase history, website browsing behavior, and demographic information. Combining these data points offers a holistic view of the customer, allowing you to craft targeted ads.

Customer Segmentation Examples

Different customer segments can be identified based on past purchases, website behavior, and demographics. For example, a customer who frequently purchases high-end electronics might be segmented differently from a customer who primarily browses budget-friendly items. Website behavior, like time spent on a product page or add-to-cart activity, provides additional insights into customer intent. Understanding the frequency and duration of visits to different product pages provides significant insights into potential customer interest.

Creating Tailored Audiences for Product Categories

To create tailored audiences for different product categories, consider these approaches. First, segment customers based on past purchases or website interactions with products within a specific category. Next, consider factors like product type, price point, and customer reviews when creating these audiences. For example, customers who viewed high-end camera lenses might be targeted with ads for related accessories.

Another example would be customers who frequently visited the “sports equipment” section of a website; these customers could be targeted with ads for new running shoes or sports apparel.
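A rule-based version of the category and price-point segmentation described above might look like the sketch below. It assigns each user to the category they viewed most often and labels them premium or budget by the average price of those views. The `PREMIUM_THRESHOLD` value and the label format are hypothetical choices for illustration; in practice these audiences would typically be built in Google Ads or your analytics tool.

```python
from collections import Counter

# Hypothetical price threshold separating "premium" from "budget" shoppers.
PREMIUM_THRESHOLD = 500.0

def segment_user(viewed):
    """Assign a user to a category/price-tier audience.

    `viewed` is a list of (category, price) tuples for products the user viewed.
    Returns a label such as "cameras:premium", or None if there is no data.
    """
    if not viewed:
        return None
    # The category the user browsed most often defines the audience.
    top_category, _ = Counter(category for category, _ in viewed).most_common(1)[0]
    prices = [price for category, price in viewed if category == top_category]
    avg_price = sum(prices) / len(prices)
    tier = "premium" if avg_price >= PREMIUM_THRESHOLD else "budget"
    return f"{top_category}:{tier}"

print(segment_user([("cameras", 899.0), ("cameras", 649.0), ("sports", 120.0)]))
```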

Google Dynamic Remarketing Ads are excellent for re-engaging website visitors, but understanding the technical side is key. To make these ads truly effective, your website needs to be structured correctly. That includes implementing Schema.org markup, which helps search engines understand your site’s content. A good resource for learning more is a guide on what you need to know about Schema.org.

Ultimately, the right Schema.org implementation can support your Dynamic Remarketing campaigns by improving ad relevance and click-through rates.

Understanding Customer Journey Stages

Understanding customer journey stages is critical in dynamic remarketing. Customers progress through different stages, from initial awareness to purchase and post-purchase engagement. By understanding these stages, you can tailor your messaging at each stage, creating a more effective customer experience. For example, ads highlighting product features could be displayed to customers who are actively researching products. Conversely, post-purchase ads could focus on customer testimonials or offer recommendations for complementary products.
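One way to operationalize journey stages is to map the furthest action a user has taken to a stage and a message theme. This sketch assumes three tracked actions (`view_item`, `add_to_cart`, `purchase`); the stage names, the intermediate "decision" stage, and the message texts are hypothetical examples that follow the research and post-purchase ideas in the paragraph above.

```python
# Hypothetical rules, checked from the furthest action to the earliest.
STAGE_RULES = [
    ("purchase", "post_purchase", "Recommend complementary products"),
    ("add_to_cart", "decision", "Remind the user about items left in the cart"),
    ("view_item", "research", "Highlight key product features"),
]

def journey_stage(actions):
    """Return (stage, message_theme) for the furthest stage a user's actions reach."""
    seen = set(actions)
    for action, stage, message in STAGE_RULES:
        if action in seen:
            return stage, message
    # No tracked product interaction yet.
    return "awareness", "Introduce the brand"

print(journey_stage(["view_item", "view_item", "add_to_cart"]))
```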

Using Product-Level Targeting in Dynamic Remarketing

Product-level targeting allows for extremely granular control over your ad campaigns. This involves targeting specific products based on various factors, such as product attributes, price, and availability. This method allows you to showcase the exact product a user viewed on your website. For instance, if a user viewed a specific model of laptop, ads showcasing that particular laptop model can be displayed.
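Product-level targeting depends on joining what a user viewed with current product data, so that an ad never features an item you can no longer sell. A minimal sketch, assuming an in-memory feed keyed by product ID and the `in_stock`/`out_of_stock` availability values used in Merchant Center feeds; the `feed` contents and the `ad_items` helper are hypothetical.

```python
# Hypothetical in-memory feed keyed by product ID; a real feed lives in Merchant Center.
feed = {
    "LAPTOP-X1": {"title": "X1 Laptop 14in", "price": 1299.00, "availability": "in_stock"},
    "LAPTOP-X2": {"title": "X2 Laptop 16in", "price": 1599.00, "availability": "out_of_stock"},
}

def ad_items(viewed_ids, feed):
    """Return feed entries for viewed products that are still in stock, in viewed order."""
    items = []
    for pid in viewed_ids:
        entry = feed.get(pid)
        # Skip unknown IDs and anything that cannot currently be bought.
        if entry is not None and entry["availability"] == "in_stock":
            items.append({"id": pid, **entry})
    return items

print(ad_items(["LAPTOP-X2", "LAPTOP-X1", "UNKNOWN"], feed))
```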

Comparing Audience Segmentation Strategies

| Segmentation Strategy | Description | Strengths | Weaknesses |
|---|---|---|---|
| Demographics | Based on age, gender, location, income, etc. | Broad segmentation, readily available data | Less precise targeting, may not capture intent |
| Behavior | Based on website activity, purchase history, browsing patterns, etc. | Highly targeted, captures intent | Requires more data collection and analysis |
| Psychographics | Based on values, interests, lifestyle, etc. | Deep understanding of customer motivations | More challenging to collect and analyze data |

Campaign Structure & Setup

Dynamic remarketing campaigns offer a powerful way to re-engage website visitors and drive conversions, and setting them up effectively is crucial for maximizing their impact. That means knowing how to link your website, create product feeds, and optimize bids and budgets. A well-structured campaign can significantly boost your return on ad spend (ROAS).

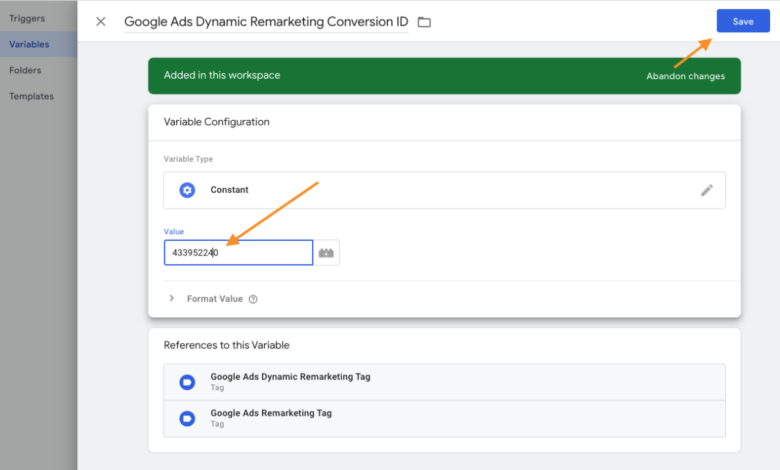

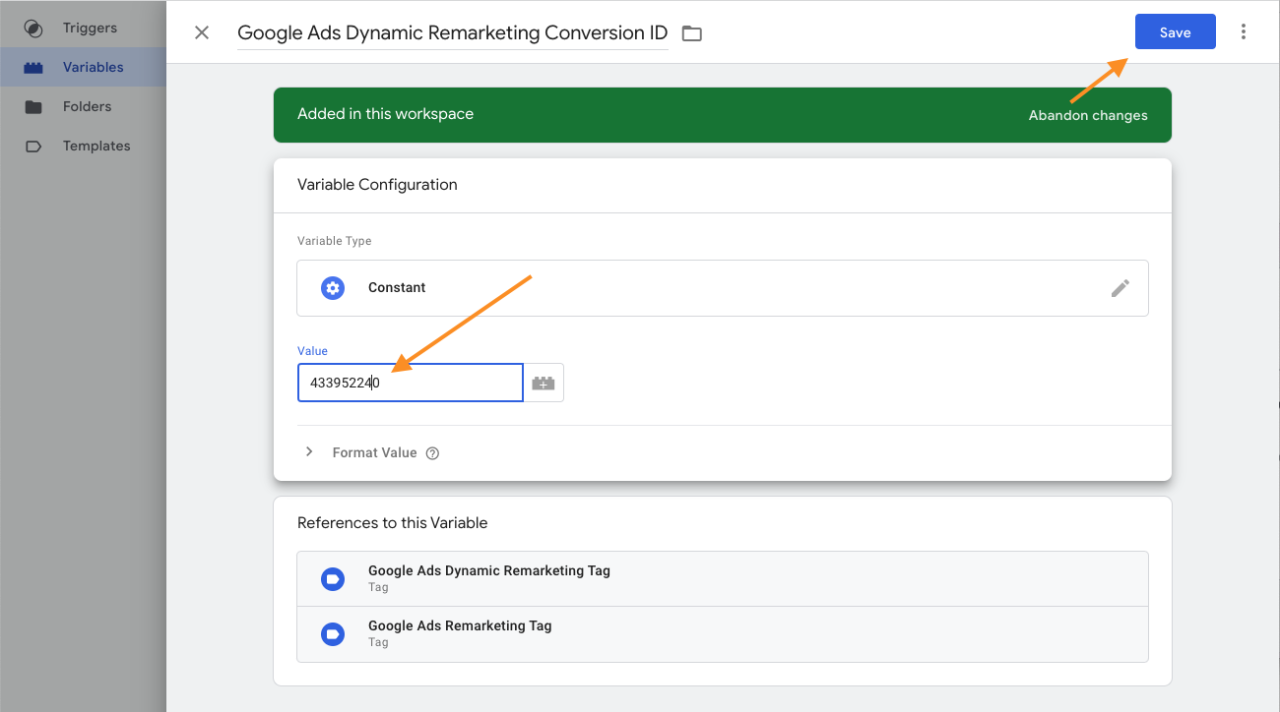

Linking Dynamic Remarketing to Your Website

Connecting your dynamic remarketing campaigns to your website is the first step. It requires configuring tracking tags on your site that record which users visit and which products they interact with, including specific product views, abandoned carts, and other valuable user data. For dynamic remarketing, those tags must pass product IDs that match the IDs in your product feed, so each interaction can be tied to the right product.

Accurate tracking ensures that your ads target the right audience with the right products.
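For dynamic remarketing, the tag has to send product IDs that match the `id` values in your product feed; that match is what lets an interaction be tied to a feed item. The sketch below assumes a retail site using Google's event-based setup, where events such as `view_item` carry an `items` array whose entries have an `id` and a `google_business_vertical`. It builds those parameters in Python and prints the corresponding `gtag('event', ...)` call. The `build_event` helper is hypothetical, and the event and parameter names should be checked against Google's current documentation for your vertical.

```python
import json

# Event names assumed from Google's event-based dynamic remarketing docs (retail);
# verify the list for your business vertical.
DYNAMIC_REMARKETING_EVENTS = {
    "view_search_results", "view_item_list", "view_item", "add_to_cart", "purchase",
}

def build_event(event_name, product_ids, value, vertical="retail"):
    """Hypothetical helper: return (event_name, params) for a gtag('event', ...) call.

    Every product ID must match an `id` in the product feed.
    """
    if event_name not in DYNAMIC_REMARKETING_EVENTS:
        raise ValueError(f"unsupported event: {event_name}")
    params = {
        "value": value,
        "items": [{"id": pid, "google_business_vertical": vertical} for pid in product_ids],
    }
    return event_name, params

# A product page for SKU-1 would emit a view_item event like this one.
name, params = build_event("view_item", ["SKU-1"], 49.99)
print(f"gtag('event', '{name}', {json.dumps(params)});")
```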

Setting Up Dynamic Product Feeds

Dynamic product feeds are the heart of dynamic remarketing campaigns. These feeds contain detailed information about your products, including images, prices, descriptions, and availability. Creating a comprehensive and accurate product feed is essential for delivering relevant ads to your target audience. These feeds act as a centralized database for your product information. This ensures that ads display the most up-to-date details.

- Data Structure: Your feed should adhere to Google’s specifications for structure and format. This ensures accurate interpretation by Google’s system. The structure should include clear fields for product titles, descriptions, prices, images, and availability.

- Regular Updates: Maintain a regular update schedule to reflect inventory changes and new products. This prevents your ads from showing out-of-stock or outdated information.

- Accuracy is Paramount: Ensure the accuracy of the data in your feed. Inaccurate information can negatively impact your campaign performance and damage your brand reputation.
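A pre-upload check catches many of the accuracy problems listed above. This sketch assumes the feed has been loaded as a list of dictionaries and checks a subset of the attributes Merchant Center commonly requires (`id`, `title`, `description`, `link`, `image_link`, `availability`, `price`). The exact required set varies by product category and country, so treat the `REQUIRED` tuple as an assumption to verify against Google's product data specification.

```python
# Assumed subset of commonly required Merchant Center attributes; verify for your category.
REQUIRED = ("id", "title", "description", "link", "image_link", "availability", "price")
VALID_AVAILABILITY = {"in_stock", "out_of_stock", "preorder", "backorder"}

def _is_blank(value):
    return value is None or str(value).strip() == ""

def validate_row(row):
    """Return the problems found in one feed row (an empty list means it passed)."""
    problems = [f"missing {field}" for field in REQUIRED if _is_blank(row.get(field))]
    availability = row.get("availability")
    if not _is_blank(availability) and availability not in VALID_AVAILABILITY:
        problems.append(f"bad availability: {availability}")
    return problems

def validate_feed(rows):
    """Map each failing row's id (or its index) to its list of problems."""
    report = {}
    for i, row in enumerate(rows):
        problems = validate_row(row)
        if problems:
            key = row.get("id") if not _is_blank(row.get("id")) else f"row {i}"
            report[key] = problems
    return report

good = {
    "id": "SKU-1", "title": "Trail Running Shoe", "description": "Lightweight trail shoe",
    "link": "https://example.com/sku-1", "image_link": "https://example.com/sku-1.jpg",
    "availability": "in_stock", "price": "89.99 USD",
}
bad = {"id": "SKU-2", "title": "", "availability": "sold out"}
print(validate_feed([good, bad]))
```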

Optimizing Bids and Budgets for Dynamic Remarketing

Optimizing bids and budgets is critical for maximizing the ROI of your dynamic remarketing campaigns. Dynamic remarketing allows you to tailor bids to different user segments and product types, ensuring that your ads are shown to the most receptive audiences. This personalized approach to bidding can lead to substantial cost savings.

- Bid Strategies: Use Google’s automated bidding strategies to optimize your bids in real time. Automated bidding adjusts your bids dynamically based on performance data.

- Budget Allocation: Allocate your budget strategically across different product categories or target segments. This ensures that you’re investing your budget in the most promising areas.

- Monitor and Adjust: Regularly monitor your campaign performance and adjust your bids and budget as needed. This ensures that your campaign is always performing at its best.
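As a hypothetical illustration of strategic budget allocation, the function below splits a fixed budget across product categories: every category receives an equal floor so it keeps collecting data, and the remainder is distributed in proportion to recent ROAS. This heuristic is an assumption for illustration, not a Google Ads feature; automated bidding works within a campaign, while a split like this would apply to budgets you set yourself.

```python
def allocate_budget(total, roas_by_category, floor_share=0.1):
    """Split `total` across categories: an equal floor for each, the rest in proportion to ROAS.

    floor_share is the fraction of the total reserved for the equal floors.
    """
    if not roas_by_category:
        return {}
    n = len(roas_by_category)
    floor = total * floor_share / n   # equal minimum per category
    remaining = total - floor * n     # budget left to distribute by ROAS
    total_roas = sum(roas_by_category.values())
    allocation = {}
    for category, roas in roas_by_category.items():
        # Fall back to an even split if no category has positive ROAS yet.
        share = roas / total_roas if total_roas > 0 else 1 / n
        allocation[category] = round(floor + remaining * share, 2)
    return allocation

# Hypothetical daily budget and ROAS figures for three categories.
print(allocate_budget(1000, {"cameras": 4.0, "lenses": 3.0, "tripods": 1.0}))
```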

Campaign Setup Steps

| Step | Action |
|---|---|
| 1 | Link your website: Configure tracking tags on your website to identify user interactions. |
| 2 | Create a dynamic product feed: Include details like images, prices, descriptions, and availability. |
| 3 | Set up your campaign: Define your target audience, budget, and bidding strategy. |
| 4 | Review and launch: Carefully review all settings before launching your campaign. |

Content & Creative Optimization

Dynamic remarketing ads rely heavily on compelling visuals and persuasive copy to convert browsing traffic into paying customers. Optimizing your content and creative assets is crucial for maximizing the effectiveness of your dynamic remarketing campaigns. This involves more than just displaying products; it’s about crafting an engaging experience that resonates with your target audience.

Effective Strategies for High-Performing Ads

Effective strategies for creating high-performing dynamic remarketing ads involve understanding your audience, tailoring your messaging to their specific needs, and continuously testing different approaches. A/B testing is essential for identifying what resonates best with your target audience, allowing for data-driven adjustments to optimize conversion rates. Focus on showcasing value propositions and addressing potential objections. Remember, the goal is to build trust and encourage action, not just display products.

Optimizing Product Listings and Descriptions

Optimizing product listings and descriptions is key to attracting and converting potential customers. Clear and concise product descriptions are essential for highlighting key features and benefits. Include high-quality images and videos that showcase the product from various angles and in use. Detailed specifications, such as size, color options, and materials, are critical for informed purchasing decisions. Consider incorporating customer reviews and ratings to build trust and social proof.

The Role of A/B Testing in Dynamic Remarketing

A/B testing is a crucial component of optimizing dynamic remarketing campaigns. It allows for systematic comparison of different ad variations to identify which perform best. By testing different headlines, descriptions, images, and calls to action, you can pinpoint the most effective elements for your target audience. Track key metrics such as click-through rates (CTR) and conversion rates to gauge the success of each variation.
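Before declaring a winner, it helps to check whether a CTR difference could be noise. The sketch below applies a standard pooled two-proportion z-test to the clicks and impressions of two variants, using only the Python standard library. The `ctr_ab_test` helper and the figures in the example are hypothetical, and the 5% significance level mentioned afterwards is a common convention rather than a requirement.

```python
import math

def ctr_ab_test(clicks_a, impressions_a, clicks_b, impressions_b):
    """Pooled two-proportion z-test on the CTRs of two ad variants.

    Returns (ctr_a, ctr_b, z, p_value), where p_value is two-sided.
    """
    ctr_a = clicks_a / impressions_a
    ctr_b = clicks_b / impressions_b
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    if se == 0:
        # No variation at all (0% or 100% CTR in both arms): nothing to test.
        return ctr_a, ctr_b, 0.0, 1.0
    z = (ctr_b - ctr_a) / se
    # Standard normal CDF via math.erf; doubled tail gives a two-sided p-value.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return ctr_a, ctr_b, z, p_value

# Hypothetical results: variant B (new headline) vs. variant A (control).
ctr_a, ctr_b, z, p = ctr_ab_test(200, 10_000, 260, 10_000)
print(f"CTR A={ctr_a:.2%}, CTR B={ctr_b:.2%}, z={z:.2f}, p={p:.4f}")
```

With these hypothetical numbers the p-value falls well below 0.05, so variant B’s higher CTR is unlikely to be chance alone. Small samples rarely clear that bar, which is one reason to let tests run long enough.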

Google Dynamic Remarketing Ads are a powerful tool for re-engaging website visitors. They let you show personalized ads to people who’ve previously interacted with your site, boosting conversions. This approach is particularly effective when combined with strategies like those discussed in Tamara Kitic Yarovoy’s insightful piece on digital marketing, which emphasizes the importance of understanding your target audience.

Ultimately, mastering dynamic remarketing ads can significantly improve your ROI.

Consistent A/B testing ensures your ads remain relevant and compelling.

Google Dynamic Remarketing ads are a powerful tool for reaching potential customers, but to truly maximize your results, connecting with your Facebook audience also matters. Following the five ways to add Page fans to your Facebook Messenger contact list can help you build a stronger relationship with your audience and ultimately drive more conversions. The insights into your audience’s preferences that you gain this way can, in turn, make your dynamic remarketing campaigns more effective.

Best Practices for Compelling Calls to Action

Compelling calls to action (CTAs) are essential for encouraging conversions. Clear and concise CTAs guide the user towards the desired action, such as “Shop Now,” “Learn More,” or “Get a Quote.” Use strong verbs and action-oriented language to create urgency and encourage immediate action. Placement of the CTA within the ad is also important, ensuring it’s easily visible and accessible.

Make the CTA visually distinct from the rest of the ad copy.

Different Types of Ad Copy Variations

| Variation Type | Headline | Description | Call to Action |
|---|---|---|---|
| Highlighting a Sale | 50% Off! | Limited-time offer on select items. | Shop the Sale Now |
| Focusing on a Feature | Experience Superior Comfort | Ergonomic design for ultimate support. | Explore the Collection |
| Addressing a Pain Point | Tired of Clutter? | Organize your space with our storage solutions. | Find Your Perfect Solution |
| Emphasizing Scarcity | Only 10 Left in Stock | Don’t miss out on this exclusive item. | Order Yours Today |

These variations cater to different customer needs and motivations, enabling you to adapt your messaging to better engage with your audience. Testing these variations allows for the identification of the most effective approach for maximizing conversion rates.

Performance Measurement & Analysis

Dynamic remarketing campaigns, while powerful, need careful monitoring and analysis to confirm they’re delivering the desired results. Understanding key performance indicators (KPIs) and interpreting the data correctly are crucial for optimizing campaigns and maximizing return on investment (ROI). This section covers methods for measuring and analyzing the effectiveness of your dynamic remarketing efforts.

A deep dive into the data allows for informed adjustments, leading to more effective targeting, compelling creatives, and ultimately, higher conversion rates. Effective analysis is the key to identifying areas for improvement and refining strategies to achieve optimal results.

Key Performance Indicators (KPIs)

Understanding the relevant KPIs is essential for evaluating the success of your dynamic remarketing campaigns. This involves focusing on metrics that directly correlate with campaign goals, such as driving sales or increasing brand awareness. The right KPIs will highlight strengths and weaknesses, guiding you towards a more efficient strategy.

- Click-Through Rate (CTR): CTR measures the percentage of ad impressions that resulted in a click. A higher CTR suggests your ad copy and targeting are resonating with the audience. For example, a CTR of 5% means the ad received 5 clicks for every 100 impressions.

- Conversion Rate: This KPI tracks the percentage of ad clicks that led to a desired action, such as making a purchase or filling out a form. A high conversion rate suggests effective ad copy, compelling offers, and targeted messaging. For instance, a conversion rate of 10% means that 10 out of every 100 clicks resulted in a conversion.

- Cost Per Acquisition (CPA): CPA is the average cost of each conversion (for example, each new customer) driven by your dynamic remarketing campaign. A lower CPA indicates better efficiency and a more effective use of your advertising budget. A CPA of $20 means that, on average, you spent $20 on advertising for each conversion.

- Return on Ad Spend (ROAS): ROAS measures the revenue generated for every dollar spent on your dynamic remarketing campaign. A higher ROAS indicates a more profitable campaign. A ROAS of 3.5 signifies that for every dollar spent on advertising, you generated $3.50 in revenue.

Tracking and Analyzing Dynamic Remarketing Ad Effectiveness

Effective tracking and analysis of dynamic remarketing ads are vital for optimizing campaign performance. Tools provided by Google Ads and other analytics platforms allow for deep dives into the data, enabling the identification of patterns and trends that can lead to significant improvements.

- Use Google Ads Reporting Tools: Google Ads provides comprehensive reporting features to track key metrics like CTR, conversion rate, CPA, and ROAS. Utilizing these tools allows for the identification of trends and patterns within the data.

- Analyze User Behavior: Tracking user behavior after clicking on your dynamic remarketing ads, including the pages they visit and the products they engage with, offers valuable insights into their interests and preferences. Understanding these behaviors can help optimize your ad targeting and messaging.

- A/B Testing: A/B testing involves comparing different versions of your dynamic remarketing ads to identify which performs better. This testing can involve various elements, including headlines, descriptions, and calls to action. By testing different versions, you can continuously improve ad performance.

Interpreting Dynamic Remarketing Campaign Data

Data interpretation involves examining the insights gained from tracking and analyzing your dynamic remarketing campaign data. This analysis helps in identifying areas for improvement and refining the campaign strategy.

- Identify High-Performing Segments: Identifying customer segments that consistently convert well allows you to refine your targeting strategy to reach more similar users. This will increase the effectiveness of your campaign and maximize ROI.

- Examine Underperforming Ads: Understanding why certain ads or segments are underperforming allows you to identify areas for improvement. Analyzing these areas can involve scrutinizing the ad copy, targeting, or creative elements.

- Review Conversion Funnels: Analyzing the conversion funnel reveals where users are dropping out of the process. Addressing these drop-off points can significantly increase conversion rates.
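Reviewing a conversion funnel mostly comes down to comparing counts between consecutive steps. This sketch takes ordered (stage, user count) pairs and reports the drop-off rate at each transition, then names the step that loses the most users. The `funnel_drop_off` helper, the stage names, and the counts are hypothetical.

```python
def funnel_drop_off(stages):
    """Report the drop-off rate between each pair of consecutive funnel stages.

    `stages` is an ordered list of (stage_name, user_count) pairs.
    """
    report = []
    for (prev_name, prev_count), (name, count) in zip(stages, stages[1:]):
        # Share of users lost at this step; 0.0 if the previous stage was empty.
        drop = 1 - count / prev_count if prev_count else 0.0
        report.append((f"{prev_name} -> {name}", round(drop, 3)))
    return report

# Hypothetical counts for users arriving from dynamic remarketing ads.
stages = [("ad_click", 1000), ("product_page", 800), ("add_to_cart", 200), ("purchase", 120)]
report = funnel_drop_off(stages)
for step, drop in report:
    print(f"{step}: {drop:.1%} drop-off")
print("Biggest leak:", max(report, key=lambda r: r[1])[0])
```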

Identifying Areas for Improvement

Identifying areas for improvement in dynamic remarketing campaign performance is crucial for maximizing ROI. Continuous optimization is key to success.

- Refine Targeting Criteria: Refining your targeting criteria based on analysis of high- and low-performing segments will help you reach the right audience with the right message.

- Optimize Ad Copy and Creatives: Analyzing data from A/B tests and user behavior can help optimize your ad copy and creative elements for better engagement and conversion rates.

- Adjust Budget Allocation: Adjusting budget allocation to focus on high-performing segments and ads can lead to improved ROI.

KPI Formulas

| KPI | Formula |
|---|---|
| Click-Through Rate (CTR) | (Clicks / Impressions) × 100 |
| Conversion Rate | (Conversions / Clicks) × 100 |
| Cost Per Acquisition (CPA) | Total Cost / Total Conversions |
| Return on Ad Spend (ROAS) | Revenue Generated / Total Cost |
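These formulas translate directly into code. This sketch computes the four KPIs, expressing CTR and conversion rate as percentages (the ratio multiplied by 100) and returning `None` when a denominator is zero. The `compute_kpis` helper is hypothetical, and the campaign figures are invented so that the results reproduce the example values from the KPI list: a 5% CTR, a 10% conversion rate, a $20 CPA, and a ROAS of 3.5.

```python
def _ratio(numerator, denominator):
    """Safe division: returns None when the denominator is zero."""
    return numerator / denominator if denominator else None

def compute_kpis(impressions, clicks, conversions, cost, revenue):
    """Compute CTR and conversion rate (as percentages), CPA, and ROAS."""
    ctr = _ratio(clicks, impressions)
    conv_rate = _ratio(conversions, clicks)
    return {
        "ctr_pct": ctr * 100 if ctr is not None else None,
        "conversion_rate_pct": conv_rate * 100 if conv_rate is not None else None,
        "cpa": _ratio(cost, conversions),
        "roas": _ratio(revenue, cost),
    }

# Hypothetical campaign: 10,000 impressions, 500 clicks, 50 conversions,
# $1,000 spend, $3,500 revenue.
print(compute_kpis(10_000, 500, 50, 1_000.0, 3_500.0))
```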

Advanced Strategies & Techniques

Dynamic remarketing campaigns offer a powerful way to reconnect with potential customers, but maximizing ROI requires advanced strategies. Going beyond basic retargeting, these techniques focus on personalization, optimization, and integration with other marketing channels. By taking this more comprehensive approach, businesses can unlock more of dynamic remarketing’s potential and achieve a higher return on their investment.

This involves not just targeting previous visitors but also analyzing their behavior and tailoring the message accordingly. A key element is integrating dynamic remarketing with other marketing tools, providing a more cohesive customer experience.

Maximizing ROI through Advanced Techniques

Dynamic remarketing’s success hinges on understanding the customer journey and tailoring messages for maximum impact. This includes leveraging dynamic pricing, responding to feedback, and using retargeting lists effectively.

Using Retargeting Lists with Other Advertising Platforms

Integrating dynamic remarketing with other advertising platforms, such as social media or email marketing, creates a unified customer experience. For example, a user who abandons their shopping cart on a website can be retargeted on Facebook with a compelling ad showcasing the same product. This coordinated approach amplifies the impact of each channel. Combining retargeting lists from different platforms allows for a more granular understanding of customer behavior, enabling more targeted messaging.

Combining Dynamic Remarketing with Other Google Ads Features

Dynamic remarketing’s potential is enhanced by integrating it with other Google Ads features. Using features like Google Shopping or Google Display Network targeting, advertisers can create a more comprehensive and engaging customer experience. For example, a retailer could combine dynamic remarketing with Google Shopping campaigns to target customers interested in specific products. This cross-promotion allows for a more efficient use of advertising budget and increases the chances of conversion.

Implementing Dynamic Pricing in Dynamic Remarketing Campaigns

Dynamic pricing in dynamic remarketing allows for real-time adjustments based on market conditions, competitor pricing, and inventory levels. This can significantly improve conversion rates by offering customers the most attractive price possible. For example, an airline can adjust ticket prices based on demand, using dynamic remarketing to target users interested in specific routes with competitive pricing.

Identifying and Responding to Customer Feedback

Monitoring customer feedback, whether through reviews, surveys, or social media comments, is critical for refining dynamic remarketing campaigns. This feedback helps identify areas for improvement and tailor the messaging to better meet customer needs. For example, if customer reviews consistently highlight a need for better customer service, the dynamic remarketing campaign can emphasize the steps you have taken to improve it.

Retargeting Strategies for Dynamic Remarketing

| Strategy | Description | Pros | Cons |
|---|---|---|---|
| Broad Retargeting | Targets a wide range of users who have interacted with the website. | Reaches a large audience; Relatively inexpensive | Less personalized; Potential for lower conversion rates |
| Interest-Based Retargeting | Targets users based on their browsing history and interests. | Higher conversion potential; More personalized | Requires more data; Potentially higher cost per click |
| Behavioral Retargeting | Targets users based on specific actions they took on the website, like abandoning a cart or viewing specific products. | Highly targeted; High conversion potential | Requires more complex setup; Can be expensive |
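The difference between broad and behavioral retargeting is easy to see with set operations on user IDs. This sketch assumes you already have (user ID, action) pairs from your site tag; the list names and the `build_lists` helper are hypothetical, and in Google Ads such lists would normally be defined as audience rules rather than computed by hand.

```python
def build_lists(events):
    """Build hypothetical remarketing lists from (user_id, action) pairs."""
    viewers = {user for user, action in events if action == "view_item"}
    carters = {user for user, action in events if action == "add_to_cart"}
    buyers = {user for user, action in events if action == "purchase"}
    return {
        # Broad: anyone who interacted with a product.
        "all_visitors": viewers | carters | buyers,
        # Behavioral: added something to a cart but never purchased.
        "cart_abandoners": carters - buyers,
        # Viewed products but took no further action.
        "viewers_only": viewers - carters - buyers,
        "purchasers": buyers,
    }

events = [
    ("u1", "view_item"), ("u1", "add_to_cart"),
    ("u2", "view_item"),
    ("u3", "view_item"), ("u3", "add_to_cart"), ("u3", "purchase"),
]
print(build_lists(events))
```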

Case Studies & Examples: Google Dynamic Remarketing Ads

Dynamic remarketing campaigns offer a powerful way to re-engage website visitors and convert them into customers. Successful implementations often rely on a deep understanding of the target audience, well-defined campaign strategies, and rigorous performance analysis. Real-world examples showcase how different industries are leveraging this technique to maximize their return on investment (ROI).

Retail Success Stories

Retailers consistently find success with dynamic remarketing. By showcasing products viewed previously on the website, they trigger a sense of familiarity and encourage purchase decisions. For instance, a clothing retailer might display items from a customer’s abandoned cart on their social media feed, offering a discount to incentivize completion. This personalized approach significantly improves conversion rates by addressing a user’s specific interest.

E-commerce Campaign Strategies

Dynamic remarketing is particularly effective in the e-commerce sector. These campaigns often focus on product-specific ads, targeting users who have interacted with particular items on the website. A jewelry store, for example, could show customers a similar ring they viewed previously, accompanied by a special offer. This personalized approach is key to boosting sales in a competitive market.

Comparative Analysis: E-commerce vs. Travel

Comparing two campaigns—one from an e-commerce site selling electronics and another from a travel agency—highlights the adaptability of dynamic remarketing. The e-commerce campaign, focusing on specific products previously browsed, saw a 25% increase in conversion rates for similar items. The travel agency campaign, emphasizing destinations viewed or booked, resulted in a 15% increase in bookings. Both campaigns achieved positive outcomes, but the specifics of the target audience and campaign design dictated the variations in performance.

Industry Best Practices

Implementing dynamic remarketing effectively requires careful attention to several best practices. A crucial one is using high-quality product images and compelling descriptions in ads. Segmenting the audience into smaller, more manageable groups also allows for more tailored messaging and offers. A well-structured campaign with accurate targeting and frequent performance monitoring is best positioned to deliver a high ROI, and strong conversion rates are usually the first sign that it is working.

Final Wrap-Up

In conclusion, Google Dynamic Remarketing Ads are a powerful tool for e-commerce businesses seeking to increase conversions and boost ROI. By understanding the core functionalities, targeting strategies, and optimization techniques, you can create highly effective campaigns that resonate with your target audience and drive significant results. This guide provides a solid foundation for beginning your journey toward mastering Dynamic Remarketing.

Remember, continuous learning and adaptation are key to success in this ever-evolving digital landscape.