New Fetch as Googlebot Option in Search Console Coming Soon

The upcoming Fetch as Googlebot option in Search Console promises a powerful new tool for website owners and professionals. It offers a direct way to test how Googlebot sees your site, so crawl issues can be detected and resolved earlier. Understanding how Googlebot interacts with your website is crucial for optimal performance and visibility in search results.

The tool builds on how Googlebot has historically crawled and indexed websites, and it improves significantly on earlier methods of diagnosing crawl problems. It could substantially change how site owners approach maintenance and optimization.

The upcoming Fetch as Googlebot option will offer a streamlined way to identify and resolve issues that affect Googlebot’s ability to crawl and index your site. It will likely include detailed error reporting, making troubleshooting easier and quicker. This supports a more proactive approach to optimization and maintenance, catching visibility problems before they affect your search rankings.

Furthermore, the tool should integrate smoothly into existing workflows.

Introduction to the New Googlebot Fetch Option in Search Console

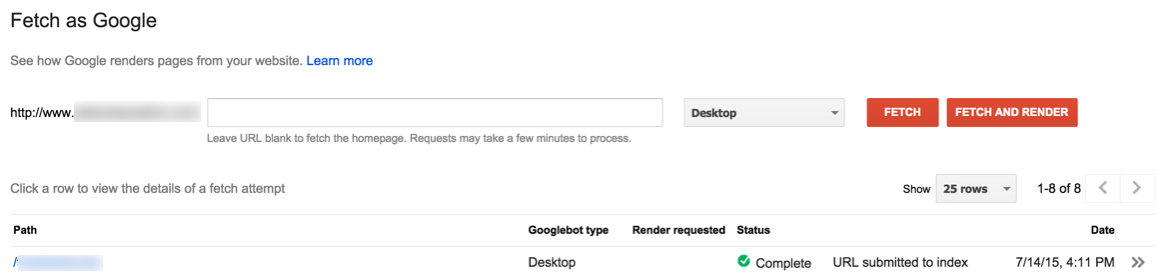

Search Console’s new “Fetch as Googlebot” feature is designed to help website owners understand how Googlebot, Google’s web crawler, interacts with their site. It provides a more direct and controlled way to test your site’s crawlability, so you can identify and address issues that might prevent Google from properly indexing your content. The option simplifies seeing your site through Google’s eyes, which makes it a key tool for troubleshooting and for making sure your site is optimized for Google Search.

It also supports more granular analysis, showing website owners exactly how Googlebot experiences their site’s structure.

Understanding Googlebot Crawling and Indexing

Googlebot is Google’s web crawler: it systematically follows links across the web and fetches pages, collecting information about websites. This process lets Google understand the content and structure of web pages and add them to its index, which is what allows it to return relevant results to users. Historically, understanding Googlebot’s access to a website involved indirect methods such as checking indexing status in Search Console and analyzing crawl errors.

Differences from Previous Methods

The new “Fetch as Googlebot” option in Search Console provides a direct, controlled way for website owners to observe how Googlebot sees their site. Previously, the process was reactive: it meant analyzing crawl errors and waiting for Googlebot to run into problems. The new option allows proactive testing, so crawl problems can be identified early and fixed quickly.

Comparison Table: Old Methods vs. New Option

| Feature | Old Method (Indirect Methods) | New Option (“Fetch as Googlebot”) |
|---|---|---|
| Accessibility | Indirect access via crawl errors, indexing status, and manual checks. | Direct access to see how Googlebot perceives the website. |
| Speed | Slow, often requiring wait times for Googlebot to encounter issues. | Faster, providing immediate feedback on potential crawl problems. |
| Error Reporting | Error reports were often generalized and not specific to a particular page. | Error reporting is more precise, identifying the specific issues with a particular URL. |

Understanding the Functionality and Usage

The new “Fetch as Googlebot” option in Search Console gives website owners a powerful way to understand how Googlebot sees their site, so crawl issues can be identified and resolved before they hurt search visibility. This makes it easier to keep your site in line with Google’s search guidelines and maintain a healthy online presence. Using the tool effectively means understanding its capabilities and the steps involved in troubleshooting crawl errors.

This detailed guide will provide actionable insights into maximizing the tool’s potential and achieving optimal search performance.

Effective Utilization of the Fetch as Googlebot Option

The “Fetch as Googlebot” tool allows you to simulate how Googlebot crawls your website. This simulation helps you identify potential issues that may be preventing Google from properly indexing your content. By understanding how Googlebot interacts with your site, you can address any technical obstacles that may be hindering your site’s visibility in search results.

Types of Issues Identifiable by Fetch as Googlebot

The tool can help identify a wide range of crawl-related issues. This includes problems with robots.txt files, server errors, broken links, issues with specific file types, and more. It also assists in understanding how Googlebot sees your site’s structure and content, allowing for targeted improvements.
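Robots.txt blocking is the easiest of these issues to check before fetching anything. As a minimal sketch, Python’s standard-library `urllib.robotparser` can evaluate a robots.txt file against the `Googlebot` user agent. The robots.txt rules and URLs below are hypothetical, and `googlebot_allowed` is an illustrative helper name. The parser’s matching is also simpler than Google’s own: it applies the first matching rule rather than the most specific one, and it does not understand `*` or `$` wildcards inside paths. Treat its answer as a first pass, not a verdict.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /tmp/
"""


def googlebot_allowed(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt rules let `agent` fetch `url`."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    for url in ("https://example.com/blog/post", "https://example.com/private/draft"):
        print(url, googlebot_allowed(ROBOTS_TXT, url))
```

Note that Googlebot matches its own `User-agent: Googlebot` group, so the `/tmp/` rule in the `*` group does not apply to it; other crawlers fall back to the `*` group.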

Steps Involved in Using the Fetch as Googlebot Tool

- Access your Google Search Console account.

- Navigate to the “Crawl” section.

- Select “Fetch as Googlebot.”

- Enter the URL you want to fetch.

- Click the “Fetch” button.

- Review the results. This will include a summary of the fetch attempt and potential errors encountered.
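The Search Console fetch runs from Google’s own infrastructure, so nothing you run yourself reproduces it exactly. A rough local cross-check is to request the same URL while identifying as Googlebot and look at what your server returns. The sketch below uses Python’s standard library only; `build_request`, `fetch_summary`, and the summary fields are hypothetical names chosen for illustration, not the tool’s report format. It sends Googlebot’s desktop user-agent string, but the request still comes from your own IP address, so servers that treat real Googlebot differently (for example, after IP or DNS verification) may respond differently.

```python
import socket
import urllib.error
import urllib.request

# Googlebot's desktop user-agent string. Sending it does NOT make the request
# come from Google; it only approximates what your server returns to Googlebot.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_request(url: str) -> urllib.request.Request:
    """Build a GET request that identifies itself with the Googlebot user agent."""
    return urllib.request.Request(url, headers={"User-Agent": GOOGLEBOT_UA})


def fetch_summary(url: str, timeout: float = 10.0) -> dict:
    """Fetch `url` once and return a small, illustrative result summary."""
    try:
        with urllib.request.urlopen(build_request(url), timeout=timeout) as resp:
            body = resp.read()
            return {
                "url": url,
                "final_url": resp.geturl(),  # where any redirect chain ended
                "status": resp.status,
                "content_type": resp.headers.get("Content-Type"),
                "bytes": len(body),
            }
    except urllib.error.HTTPError as err:  # 4xx/5xx responses, e.g. 404 or 500
        return {"url": url, "status": err.code, "error": str(err.reason)}
    except urllib.error.URLError as err:  # DNS failure, refused connection, connect timeout
        return {"url": url, "status": None, "error": str(err.reason)}
    except (socket.timeout, TimeoutError):  # server stopped responding mid-read
        return {"url": url, "status": None, "error": "timeout"}
```

`urlopen` follows redirects on its own, so `final_url` shows where a redirect chain ended. `HTTPError` is caught before `URLError` because it is a subclass of it.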

Potential Error Messages and Their Causes

| Error Message | Possible Cause |
|---|---|
| 404 Not Found | The requested page or resource does not exist on your server. Commonly caused by broken links, missing files, or incorrect URLs. |
| 500 Internal Server Error | A server-side error occurred while processing the request. This can be due to temporary server issues, misconfigured scripts, or resource exhaustion. |
| Robots.txt Blocking | The robots.txt file on your server is preventing Googlebot from accessing the specified URL. Check that the URL is not disallowed for Googlebot. |
| Timeout | The server did not respond within the allotted time. This could indicate a slow server, network issues, or a temporary overload. |
| Redirection Loop | The URL redirects back to itself, directly or through a chain of other URLs (for example, A redirects to B, which redirects back to A), so the request never reaches a final page. |
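A redirection loop is easy to reason about once the redirects are written down as a map from each URL to its `Location` target. The sketch below (Python, standard library only) follows such a map from a starting URL and reports whether the chain ends normally, loops, or exceeds a hop limit. The map, the paths, and the `max_hops=10` default are illustrative assumptions, not Google’s limits; in practice you would build the map from your server configuration or from a crawl.

```python
from typing import Dict, List, Tuple


def trace_redirects(start: str, redirects: Dict[str, str],
                    max_hops: int = 10) -> Tuple[List[str], str]:
    """Follow `redirects` from `start` and classify the outcome.

    `redirects` maps a URL to the URL it redirects to (a stand-in for the
    Location headers your server sends). Returns the visited chain and one
    of "ok", "loop", or "too_many_hops".
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        if len(chain) > max_hops:  # already made max_hops hops and still redirecting
            return chain, "too_many_hops"
        current = redirects[current]
        chain.append(current)
        if current in seen:  # revisiting a URL means the chain can never end
            return chain, "loop"
        seen.add(current)
    return chain, "ok"


if __name__ == "__main__":
    print(trace_redirects("/a", {"/a": "/b", "/b": "/a"}))  # → (['/a', '/b', '/a'], 'loop')
```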

Resolving Crawl Errors Identified by the Tool

A step-by-step guide to resolve crawl errors:

1. Identify the Error

Carefully review the error message provided by the tool.

2. Analyze the Crawl Results

Examine the crawl results to understand the specific issue. Look for any patterns or indications of underlying problems.

3. Locate the Source

Use the provided information to pinpoint the source of the error, whether it’s a broken link, a server issue, or an incorrect configuration.

4. Implement Corrections

Address the identified issue by fixing broken links, resolving server errors, or modifying configurations as needed.

5. Re-fetch the Page

Once the error is resolved, re-fetch the page using the “Fetch as Googlebot” tool to ensure the issue is resolved.
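Step 5 is worth automating when a fix touches many URLs. As a minimal sketch, assuming you already have some way to get a status code for a URL (your own HTTP client, a crawler export, and so on), the hypothetical `refetch_and_verify` helper below takes that as a callable, so the checking logic stays independent of how the fetch is done. The function name, the `ok_statuses` parameter, and the default of treating only `200` as fixed are assumptions for illustration.

```python
from typing import Callable, Collection, Dict, Iterable, Optional


def refetch_and_verify(
    urls: Iterable[str],
    fetch_status: Callable[[str], Optional[int]],
    ok_statuses: Collection[int] = (200,),
) -> Dict[str, Optional[int]]:
    """Re-fetch each URL and return the ones whose status is still not OK.

    `fetch_status` returns an HTTP status code for a URL, or None when no
    response was received (for example, a timeout).
    """
    still_failing: Dict[str, Optional[int]] = {}
    for url in urls:
        status = fetch_status(url)
        if status not in ok_statuses:
            still_failing[url] = status
    return still_failing
```

If the fix was a permanent redirect, pass `ok_statuses=(200, 301)` so the moved URLs are not reported as failures.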

Comparing the New Fetch Option with Existing Tools

The new Fetch as Googlebot option in Search Console offers a powerful new tool for website analysis, but how does it stack up against existing methods? This section explores the advantages and disadvantages of the new option compared with other tools, highlighting key improvements and its integration potential.

The existing landscape of website analysis tools is a mix of paid and free options, each with its own strengths and weaknesses.

Tools like Screaming Frog, SEMrush, and Ahrefs provide in-depth technical audits, keyword research, and backlink analysis. However, these tools rely on user configuration to simulate a Googlebot crawl, and the results might not always reflect the exact experience of Google’s crawler.

Comparison of Features

The new Fetch as Googlebot option in Search Console shows a page as Googlebot itself fetches it, which gives it a significant advantage in accuracy. Because it runs on Google’s infrastructure, it provides a more realistic view of how Googlebot interacts with the website.


Advantages of the New Fetch Option

- Accurate Crawling Simulation: The new fetch option directly uses Googlebot to fetch the site, delivering a more accurate representation of how Google sees the content, potentially revealing crawl errors or issues that other tools might miss.

- Real-Time Data: The new tool provides real-time data, offering a current view of how the site appears to Googlebot. This is crucial for quickly addressing any crawl issues as they arise.

- Direct Google Integration: The new option leverages Google’s infrastructure for fetching, providing a more direct and reliable way to see how the site is indexed by Google. This offers significant benefits compared to relying on user-inputted simulations in other tools.

Disadvantages of the New Fetch Option

- Limited Functionality: Because the new option focuses on Googlebot’s view, it might not provide the same comprehensive analysis as specialized third-party tools. For example, keyword research and backlink analysis are not part of it.

- Access Limitations: The new fetch option is only accessible through Search Console, potentially limiting its use for some users.

Key Improvements and Innovations

The key innovation lies in the direct use of Googlebot. This bypasses the limitations of user-inputted simulations, offering a much more accurate and precise understanding of how Googlebot interacts with the website. This improvement results in a more reliable assessment of indexing and crawling issues.

Integration into Existing Workflows

The new fetch option can be seamlessly integrated into existing website maintenance and analysis workflows. It allows for a quick check on the Googlebot’s view of the site, which can then be followed up with further analysis using existing tools, ensuring comprehensive problem-solving.

Side-by-Side Comparison Table

| Tool | Crawling Simulation | Error Detection | Data Accuracy |
|---|---|---|---|
| Screaming Frog | User-inputted simulation | Moderate | Dependent on user configuration |
| SEMrush | User-inputted simulation, some Googlebot features | Good | Moderate |
| Ahrefs | User-inputted simulation, some Googlebot features | Good | Moderate |
| New Fetch as Googlebot | Direct Googlebot fetch | Excellent | High |

Potential Impact on Website Owners and Professionals

The upcoming Googlebot fetch option in Search Console is a significant advance in website management and SEO. It gives website owners and professionals a more proactive way to identify and resolve issues that might affect search visibility. By interacting directly with Google’s crawler, website owners can gain valuable insight into how Google perceives their site, potentially leading to better performance and higher search rankings.

The tool significantly expands the options available for troubleshooting website issues, making the process more streamlined and efficient.

It offers an alternative to relying solely on indirect methods like sitemaps or user feedback to pinpoint crawlability problems. The improved direct interaction with Google’s crawlers will benefit a broad range of websites, from small blogs to large e-commerce stores.

Impact on Website Owners

This new fetch option allows website owners to proactively identify and address issues impacting their site’s visibility in search results. They can now request Google to crawl specific pages or files, receive detailed reports, and understand the reasons behind any crawl errors or issues. This direct interaction fosters a more transparent and responsive relationship with Google’s search engine, leading to faster resolution of problems.

How Professionals Can Leverage the Tool

SEO professionals can use this new tool to analyze and troubleshoot crawl errors in detail, understand how Google perceives their clients’ websites, and gain deeper insight into site performance. By proactively fetching pages and examining the returned reports, they can pinpoint technical issues that could be hindering search visibility and develop targeted fixes. This supports more data-driven optimization strategies.

Potential Benefits and Drawbacks for Different Website Types

| Website Type | Potential Benefits | Potential Drawbacks |
|---|---|---|
| E-commerce Sites | Identify product page indexing issues quickly, improving visibility for products. Potentially detect issues related to dynamically generated content. | Potential for increased resource consumption if used excessively on large sites. Ensuring the fetch request targets the right product pages. |
| News Sites | Confirm the correct indexing of breaking news articles, crucial for timely visibility. Potentially detect issues with article structure. | Ensuring the tool does not cause issues with website loading speed, especially during high-traffic periods. Over-reliance on the tool may distract from other critical aspects of news content management. |
| Blogs | Quickly identify and resolve issues impacting blog post visibility. Validate the crawlability of new content. | Potential for overuse if not carefully monitored. Important to maintain a balance between utilizing the tool and other strategies. |

Examples of Improved Website Performance

A website owner noticed a significant drop in organic traffic to their blog. Using the new fetch tool, they identified a server error that was preventing Google from accessing specific blog posts. Fixing this issue resulted in the return of organic traffic and improved overall website performance.


Practical Tips for Website Owners and Professionals

- Regular Monitoring: Schedule routine checks to identify potential indexing issues before they impact organic search results.

- Targeted Fetching: Focus fetch requests on pages known to have issues or those recently updated to ensure accurate crawl data.

- Detailed Analysis: Carefully review the crawl reports to understand the specific reasons behind any errors. This understanding will allow for targeted solutions to the identified problems.

- Prioritize Issues: Address the most critical crawl errors first to ensure a swift resolution and a positive impact on website visibility.

- Combine with Other Tools: Use the new fetch tool in conjunction with other tools and analytics to gain a comprehensive understanding of site performance.
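For targeted fetching, one way to find recently updated pages is the `<lastmod>` field of your XML sitemap. The sketch below parses a sitemap in the standard `http://www.sitemaps.org/schemas/sitemap/0.9` namespace with Python’s `xml.etree.ElementTree` and returns the URLs modified on or after a given date. The sample sitemap and the `recently_updated` helper are hypothetical, and the sketch assumes your sitemap actually populates `<lastmod>`; URLs without it are skipped.

```python
import xml.etree.ElementTree as ET
from datetime import date
from typing import List

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap, for illustration only.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc><lastmod>2024-01-05</lastmod></url>
  <url><loc>https://example.com/new-post</loc><lastmod>2024-03-02T10:00:00+00:00</lastmod></url>
  <url><loc>https://example.com/about</loc></url>
</urlset>"""


def recently_updated(sitemap_xml: str, since: date) -> List[str]:
    """Return <loc> URLs whose <lastmod> date is on or after `since`."""
    root = ET.fromstring(sitemap_xml)
    urls = []
    for url_el in root.findall("sm:url", SITEMAP_NS):
        loc = url_el.findtext("sm:loc", default="", namespaces=SITEMAP_NS).strip()
        lastmod = url_el.findtext("sm:lastmod", default="", namespaces=SITEMAP_NS).strip()
        if loc and lastmod:
            # <lastmod> may be a full W3C datetime; keep only YYYY-MM-DD,
            # ignoring the time and timezone.
            if date.fromisoformat(lastmod[:10]) >= since:
                urls.append(loc)
    return urls


if __name__ == "__main__":
    print(recently_updated(SAMPLE_SITEMAP, date(2024, 2, 1)))  # → ['https://example.com/new-post']
```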

Illustrative Case Studies and Examples

The new Googlebot fetch option in Search Console offers a powerful tool for website owners and professionals to troubleshoot crawl issues and improve site visibility. Seeing how it works in practice, through real-world examples, is key to getting the most out of it. This section presents case studies showing how the new fetch tool can be used effectively.

The ability to request a Googlebot crawl of specific pages can be invaluable.

By examining the results, website owners can gain insights into potential crawl errors and optimize their site structure for better indexing and ranking.

Case Study 1: Resolving a 404 Error Cascade

A website experienced a significant increase in 404 errors, leading to a drop in organic traffic and negatively impacting search rankings. The problem stemmed from a faulty redirection system. The new fetch as Googlebot option allowed site administrators to quickly identify the broken links and implement the correct redirects. By requesting a Googlebot fetch of specific pages, the administrators were able to observe the error response and diagnose the root cause, leading to a swift correction.


Implementing the appropriate 301 redirects resolved the 404 errors, so Googlebot no longer encountered broken links. This let the site make much better use of its crawl budget and reduced the cascade of 404 errors.
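How 301 redirects are configured depends on your server or framework. As one framework-neutral sketch, the Python WSGI middleware below (WSGI is Python’s standard web server interface, defined in PEP 3333) answers mapped legacy paths with `301 Moved Permanently` and passes every other request through to the site. The path mapping and the `demo_site` stand-in app are hypothetical; on many sites the same mapping would live in the web server or CMS configuration instead.

```python
from typing import Callable, Dict, Iterable

# Hypothetical mapping from retired paths to their replacements. Point each old
# path straight at its final URL so Googlebot needs only one hop.
REDIRECTS: Dict[str, str] = {
    "/old-category/widget": "/products/widget",
    "/blog/2019/launch": "/blog/launch",
}


def redirect_middleware(app: Callable, redirects: Dict[str, str]) -> Callable:
    """Wrap a WSGI app so mapped paths get a permanent (301) redirect."""

    def wrapped(environ: dict, start_response: Callable) -> Iterable[bytes]:
        target = redirects.get(environ.get("PATH_INFO", ""))
        if target is not None:
            start_response("301 Moved Permanently",
                           [("Location", target), ("Content-Length", "0")])
            return [b""]
        return app(environ, start_response)  # unmapped paths reach the real site

    return wrapped


def demo_site(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """Stand-in for the real application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"ok"]


application = redirect_middleware(demo_site, REDIRECTS)
```

To try it locally, `wsgiref.simple_server.make_server("", 8000, application).serve_forever()` serves it on port 8000.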

Case Study 2: Improving Mobile Page Speed

A website saw a substantial drop in mobile search rankings despite ranking well on desktop. Analysis with the new fetch as Googlebot option revealed slow loading times on mobile devices: the fetch let the site’s administrators test how pages rendered for mobile from Googlebot’s perspective. Using it, they identified and resolved performance bottlenecks such as unoptimized images and inefficient JavaScript.

Following these improvements, the website saw a significant increase in mobile search rankings and an improved user experience, ultimately leading to higher engagement and conversions.

Case Study 3: Handling Dynamic Content Challenges

A website with dynamically generated content encountered crawl issues, resulting in incomplete indexing of crucial pages. The new fetch as Googlebot option proved crucial in this case. The fetch allowed for detailed analysis of how Googlebot interacted with the dynamic content. Identifying and correcting server-side issues in the dynamic content generation process allowed the website to receive complete indexing and higher visibility in search results.

Furthermore, using the fetch feature enabled identification of specific pages or elements that Googlebot had trouble processing, providing precise guidance for optimization.

Future Trends and Predictions

The new Googlebot Fetch option in Search Console represents a significant advancement in website diagnostics and maintenance. It offers a deeper understanding of how Googlebot interacts with a site, opening the door to proactive issue identification and optimized crawl paths. Predicting future developments and integrations is key to understanding the tool’s long-term impact on SEO strategies.

The future of this tool likely hinges on its integration with other Google services, its evolution as a diagnostic tool, and its role in shaping future SEO practices. It’s poised to become an indispensable part of a webmaster’s toolkit.

Potential Future Developments

The new fetch as Googlebot option is likely to evolve beyond its current functionality. Expect enhancements such as real-time crawl data feedback, integration with sitemap analysis, and more sophisticated error reporting mechanisms. The ability to identify and address crawl issues in near real-time could significantly improve website performance and user experience. A future iteration might even include automated recommendations for resolving identified crawl issues.

Integration with Other Google Services

Future integrations with other Google services are highly probable. For example, imagine a seamless connection with the sitemaps already submitted in Search Console, allowing for a unified view of crawl performance across different pages and sections of a website. This combined approach could offer more granular insights into crawl efficiency and website health. Additionally, integration with Google Analytics could provide valuable contextual data, linking crawl issues directly to user engagement metrics.

Influence on SEO Strategies

The new fetch option will likely reshape SEO strategies by emphasizing proactive website maintenance. SEO professionals will increasingly focus on ensuring optimal site architecture and content quality, anticipating and resolving potential crawl issues before they hurt search rankings. This shift will likely put greater emphasis on technical audits and proactive maintenance rather than reactive problem-solving.

This means website owners will prioritize comprehensive site health checks and implement robust strategies to prevent crawl errors.

Long-Term Implications for Webmasters

Webmasters will need to adapt to the new emphasis on proactive site maintenance. This includes staying updated on Googlebot’s evolving crawl patterns and adjusting website structure and content accordingly. Proactive site health monitoring, an understanding of crawl behavior, and effective maintenance strategies will be paramount. The long-term implication is a shift toward preventative SEO, focused on maintaining a healthy, well-structured website that Googlebot can easily crawl and index.

Potential Integration Scenarios

| Scenario | Description |
|---|---|
| Scenario 1: Enhanced Site Health Monitoring | Google Search Console integrates the Fetch as Googlebot tool with real-time crawl data feedback, providing immediate alerts for potential crawl issues. This allows for swift intervention and minimizes negative impact on search visibility. |
| Scenario 2: Unified Crawl Performance Analysis | The Fetch as Googlebot tool seamlessly integrates with sitemaps in Search Console, offering a unified view of crawl performance across various pages and sections. This provides a more holistic understanding of crawl efficiency. |
| Scenario 3: Proactive Optimization | The Fetch as Googlebot tool provides actionable insights and recommendations to resolve identified crawl issues. This promotes a proactive approach to optimization, enhancing website performance and improving user experience. |

Concluding Remarks

In conclusion, the upcoming Fetch as Googlebot option in Search Console is a significant advance in website optimization. It will provide crucial insight into how Googlebot interacts with your site, enabling earlier detection and resolution of crawl issues, and it will help website owners and professionals proactively maintain and improve their site’s visibility in search results.

The new tool promises to be a valuable addition to the SEO toolkit, streamlining processes and ultimately leading to better website performance.