How Do Search Engines Work? A Deep Dive

How do search engines work? This article follows the process behind finding information online, from the initial user query to the presentation of results. We’ll look at different types of search engines, the mechanics of crawling and indexing, and the algorithms that determine which results appear first.

Imagine a vast library containing billions of books, each with countless pages. A search engine is like a highly advanced librarian, instantly locating the precise information you need, regardless of its location within this massive collection. This is achieved through a complex interplay of factors, including sophisticated algorithms and meticulous indexing processes. Understanding these fundamentals is key to navigating the digital world effectively and understanding how to get the most out of search engines.

Introduction to Search Engines

Search engines are powerful tools that connect users with information across the vast expanse of the internet. They act as sophisticated librarians, cataloging and organizing web pages, images, videos, and other digital resources. Their fundamental purpose is to efficiently retrieve relevant results based on user queries. This allows users to find specific information quickly and easily, rather than having to manually browse through countless websites.

Search engines are not limited to finding web pages. They have evolved to encompass various types of information. Different types of search engines cater to different needs, such as finding images, videos, news articles, academic papers, and even specific files. This variety of specialized search engines ensures that users can find the precise information they are looking for.

Types of Search Engines

Different types of search engines cater to different information needs. These include:

- Web Search Engines: These are the most common type, allowing users to find web pages containing specific keywords.

- Image Search Engines: These search engines allow users to find images based on keywords, descriptions, or image characteristics.

- Video Search Engines: These are designed to find videos based on keywords, topics, or creators.

- Academic Search Engines: These engines specialize in retrieving scholarly articles, research papers, and other academic content.

- News Search Engines: These focus on finding recent news articles from various sources.

User Interaction with a Search Engine

The user interaction with a search engine typically involves these steps:

- Formulating a Query: The user types a query into the search engine’s search bar, specifying the information they desire.

- Submitting the Query: The user initiates the search process by pressing the enter key or clicking a button.

- Processing the Query: The search engine analyzes the user’s query to understand the intent and relevant keywords.

- Retrieving Results: The search engine retrieves web pages, images, or other relevant information from its vast index.

- Ranking Results: The search engine ranks the retrieved results based on their relevance to the query, often using complex algorithms.

- Displaying Results: The search engine presents the ranked results to the user, typically in a list format, with links to the corresponding resources.

Search Query Processing

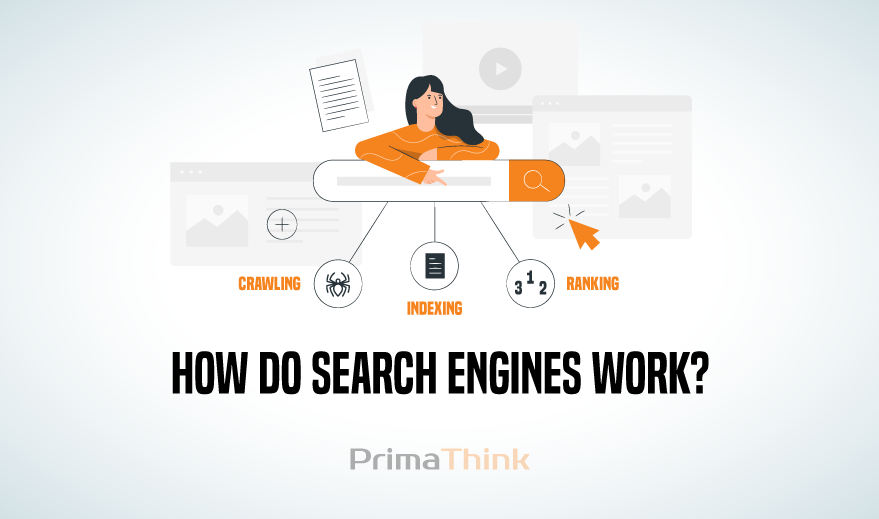

Answering a search query depends on a multi-step pipeline, and the first two steps run continuously in the background before any user types a query. The steps involve:

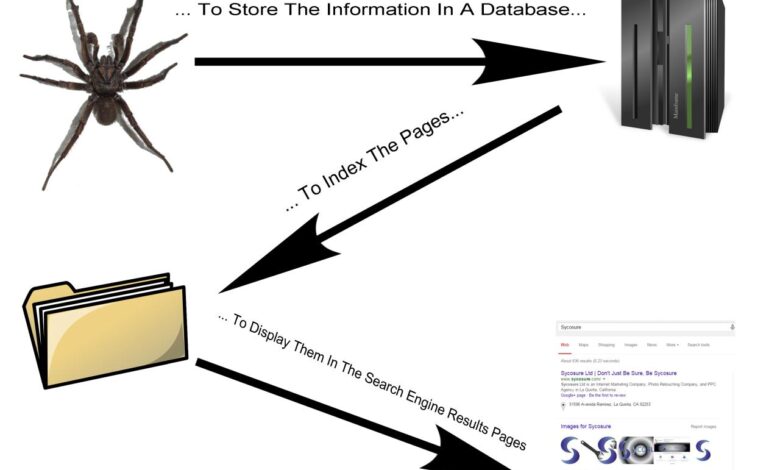

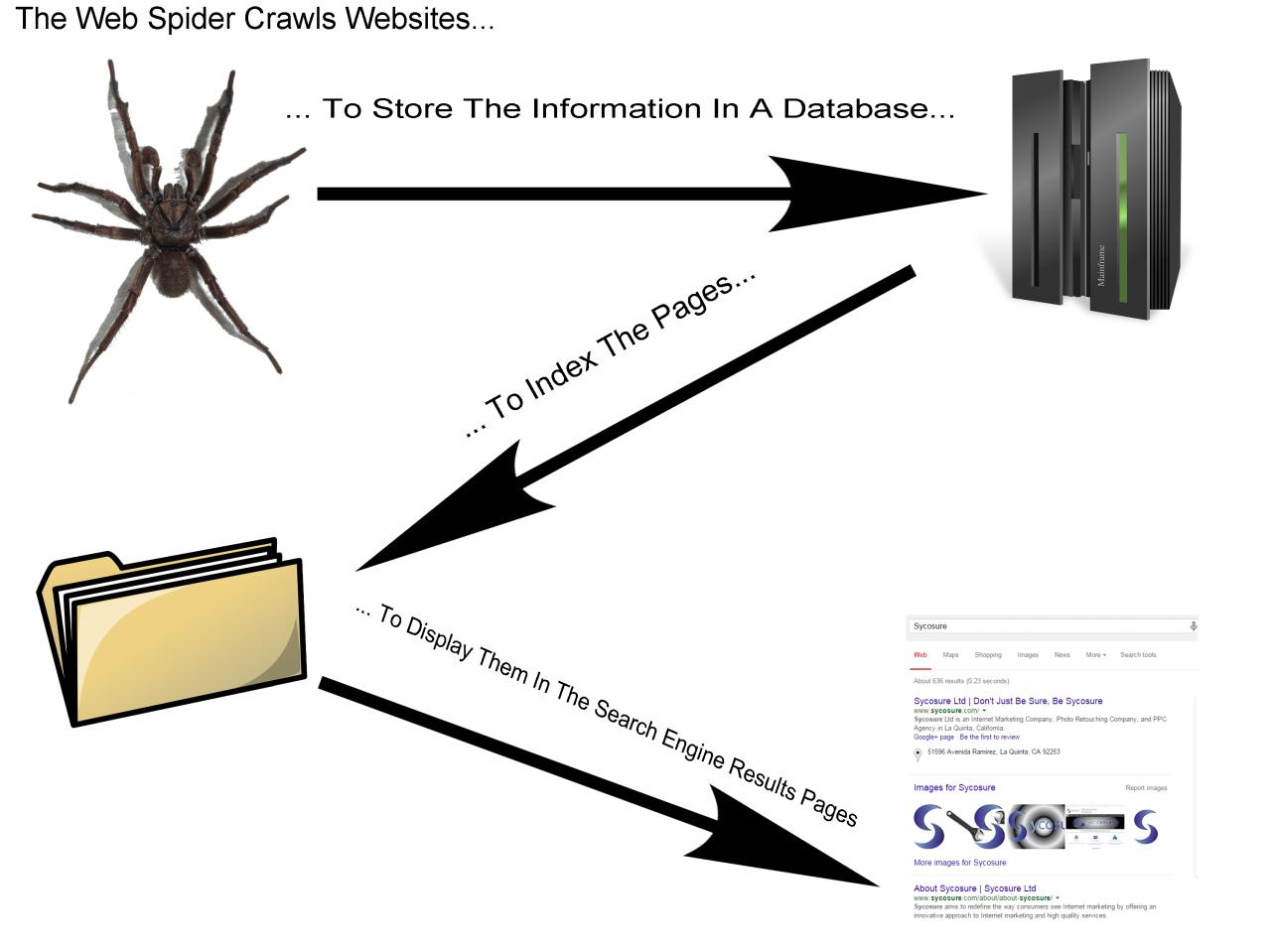

- Crawling: Search engine crawlers (also known as spiders) traverse the web, following links to discover new pages and update the index.

- Indexing: The discovered pages are processed, analyzed, and added to the search engine’s index, which is a massive database of web pages and their content.

- Query Processing: When a user submits a query, the search engine uses algorithms to understand the user’s intent and identify the most relevant pages.

- Ranking: Algorithms rank the indexed pages based on various factors, including relevance, authority, and freshness of the content.

- Displaying Results: The ranked results are presented to the user in a user-friendly format.

Flowchart of User Interaction

A flowchart of this interaction would depict the user’s input, the search engine’s processing, and the output of ranked results.

Crawling and Indexing

Search engines are constantly updating their knowledge of the web. This crucial process relies on sophisticated algorithms and automated tools. The foundation of this process involves two fundamental steps: crawling and indexing. Crawlers meticulously explore the vast expanse of the internet, while indexing organizes the gathered information into a searchable database.

The process of crawling and indexing ensures that search engines can deliver relevant results to users’ queries, connecting them to the specific information they seek. This dynamic system is vital for navigating the ever-growing digital landscape.

Web Crawling Explained

Web crawlers, often called spiders, are automated programs that traverse the World Wide Web. They systematically follow links from one web page to another, mimicking the way humans browse the internet. This systematic exploration allows them to discover new pages and update their knowledge of existing ones. The process is essential for keeping the search engine’s database current and comprehensive.

Web Crawler Functionality

Web crawlers play a critical role in gathering information. They are designed to follow hyperlinks, traversing the web’s intricate network of interconnected pages. Their actions are not arbitrary; they follow predefined rules and protocols to respect website owners’ requests. Crawlers also consider the structure of the website and the frequency of updates to determine the importance and relevance of each page.
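
The most common way site owners express such requests is a robots.txt file. As a minimal sketch, Python's standard-library `urllib.robotparser` can check whether a crawler may fetch a URL. The robots.txt content, the `ExampleBot` user agent, and the example.com URLs below are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# "ExampleBot" is a made-up crawler name used only for this sketch.
allowed = parser.can_fetch("ExampleBot", "https://example.com/articles/search.html")
blocked = parser.can_fetch("ExampleBot", "https://example.com/private/notes.html")
delay = parser.crawl_delay("ExampleBot")  # seconds to wait between requests, if stated

print(allowed, blocked, delay)
```

A polite crawler would run a check like this before every request and skip any URL for which `can_fetch` returns `False`.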

Discovery and Access of Web Pages

The discovery of new web pages is a key function of web crawlers. They start with a list of known URLs and then follow the links found on those pages. This process continues recursively, expanding the scope of their exploration. The process is often influenced by factors like the page’s popularity, the frequency of updates, and the website’s structure.

The frequency with which a crawler visits a website is determined by various criteria, including the website’s importance and the frequency of changes.
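
The discovery loop described above can be sketched as a breadth-first traversal. To keep the example self-contained, the `LINKS` dictionary below is a hypothetical stand-in for the live web, and `fetch_links` is an assumed callback that a real crawler would implement with HTTP requests and HTML parsing.

```python
from collections import deque

# Hypothetical link graph standing in for the live web: page -> outgoing links.
LINKS = {
    "https://example.com/": ["https://example.com/a", "https://example.com/b"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/c"],
    "https://example.com/b": ["https://example.com/"],
    "https://example.com/c": [],
}

def crawl(seeds, fetch_links, max_pages=100):
    """Breadth-first discovery: visit each URL once and queue newly found links."""
    frontier = deque(seeds)   # URLs waiting to be visited
    seen = set(seeds)         # URLs already queued, so nothing is visited twice
    order = []
    while frontier and len(order) < max_pages:
        url = frontier.popleft()
        order.append(url)
        for link in fetch_links(url):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return order

discovered = crawl(["https://example.com/"], lambda url: LINKS.get(url, []))
print(discovered)
```

Production crawlers replace the simple queue with a prioritized frontier, so that important or frequently updated pages are revisited sooner.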

Content Analysis and Processing

Once a web page is accessed, the crawler analyzes its content. This involves extracting text, images, and other media from the page. Crucially, this analysis is not merely about copying the content but about understanding the structure and context. The crawler extracts information from the page’s code, including meta descriptions and other relevant tags. This extraction allows the crawler to categorize and index the page’s content accurately.
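
As a rough illustration of this extraction step, the sketch below uses Python's built-in `html.parser` to pull the title, meta description, links, and visible text out of a page. The `PageExtractor` class and the sample HTML are invented for this example; real crawlers handle far messier markup.

```python
from html.parser import HTMLParser

class PageExtractor(HTMLParser):
    """Collects the title, meta description, links, and text of one page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self.links = []
        self.text_parts = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attrs.get("name") == "description":
            self.description = attrs.get("content", "")
        elif tag == "a" and "href" in attrs:
            self.links.append(attrs["href"])

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data
        elif data.strip():
            self.text_parts.append(data.strip())

# Made-up sample page.
HTML = """<html><head><title>Search Basics</title>
<meta name="description" content="How crawlers read a page.">
</head><body><h1>Crawling</h1><p>Spiders follow <a href="/indexing">links</a>.</p></body></html>"""

page = PageExtractor()
page.feed(HTML)
print(page.title, "|", page.description, "|", page.links)
```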

Categorization of Web Content

Categorizing web content is crucial for efficient indexing. The process typically involves classifying content into various types:

- Text: Crawlers extract textual content from web pages, including headings, paragraphs, and other elements.

- Images: Images are identified and categorized based on their format and metadata, such as alt text.

- Videos: Similar to images, videos are identified by their format and metadata. This includes metadata like descriptions or titles.

This categorization is fundamental to indexing the diverse forms of online content.

Search Engine Database Index Structure

The structure of a search engine’s database index is complex but crucial for efficient searching. The index is essentially a massive database storing information about web pages.

| Field | Description |
|---|---|
| URL | Unique address of the web page. |
| Content | Extracted text, images, and other media. |
| Metadata | Information about the page, like title and description. |
| Links | List of links pointing to and from the page. |
| Ranking Factors | Metrics used to determine the page’s importance and relevance. |

The index is organized to facilitate quick retrieval of relevant results based on user queries. This structure allows search engines to provide comprehensive and accurate results to users’ search requests.
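
One common data structure behind this kind of quick retrieval is an inverted index, which maps each term to the pages that contain it. The article does not name a specific structure, so treat the following as an illustrative sketch; the `PAGES` corpus and the `tokenize` helper are assumptions made for the example.

```python
import re
from collections import defaultdict

# Hypothetical mini-corpus: URL -> extracted text.
PAGES = {
    "https://example.com/crawl": "Crawlers follow links to discover pages",
    "https://example.com/index": "The index maps terms to pages",
    "https://example.com/rank": "Ranking orders pages by relevance",
}

def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_inverted_index(pages):
    """Map every term to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term].add(url)
    return index

index = build_inverted_index(PAGES)
print(sorted(index["pages"]))   # every page mentions "pages"
print(sorted(index["index"]))   # only one page mentions "index"
```

Looking up a term is then a single dictionary access, instead of a scan over every stored page.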

Search Algorithms

Search engines are far more than just simple matchers. They employ sophisticated algorithms to sift through billions of web pages and present the most relevant results. These algorithms are constantly evolving, keeping pace with the ever-changing landscape of the internet and user behavior. Understanding these algorithms is key to comprehending how search engines function and how to optimize your content for better visibility.

Ranking Algorithms

Search engines use a variety of ranking algorithms to determine the order of search results. These algorithms consider a multitude of factors, including the relevance of the content to the search query, the authority and trustworthiness of the website, and the user experience on the site. The specific weighting of these factors can differ significantly between search engines.

Relevance in Search Results

Relevance is paramount in search engine results. Search engines strive to match search queries with documents that contain the most pertinent information. This involves analyzing the keywords used in the query and identifying documents that use those keywords in a meaningful context. The quality and context of the content are essential for relevance. High-quality, well-written content with accurate information is more likely to be considered relevant.
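
A classic way to put a number on keyword relevance is TF-IDF, which weighs how often a term appears in a document against how common it is across the whole collection. The sketch below uses one simple variant of the formula (term frequency normalized by document length, natural-log IDF); the three sample documents are invented, and production engines use considerably more elaborate scoring.

```python
import math
import re
from collections import Counter

# Invented sample documents.
DOCS = {
    "doc1": "search engines rank pages",
    "doc2": "search engines crawl pages and index pages",
    "doc3": "librarians catalog books",
}

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def tf_idf(term, doc_id, docs):
    """Score one term for one document using a basic TF-IDF variant."""
    tokens = tokenize(docs[doc_id])
    tf = Counter(tokens)[term] / len(tokens)
    containing = sum(1 for text in docs.values() if term in tokenize(text))
    if containing == 0:
        return 0.0
    idf = math.log(len(docs) / containing)
    return tf * idf

scores = {doc_id: tf_idf("crawl", doc_id, DOCS) for doc_id in DOCS}
print(scores)  # only doc2 contains "crawl", so only doc2 scores above zero
```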

Prioritizing Search Results

Search engines prioritize results based on a complex combination of factors. These factors often include the relevance score, the authority of the website, the user engagement metrics, and the freshness of the content. Results deemed more relevant, authoritative, and engaging are usually ranked higher. Recent updates and new information often get higher priority.
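
To make the idea of combining factors concrete, here is a deliberately simplified sketch that blends four signals into one score with a weighted sum. The weights, the `Candidate` fields, and the sample values are all hypothetical; real engines do not publish their formulas and use far more signals.

```python
from dataclasses import dataclass

# Hypothetical weights chosen only for illustration.
WEIGHTS = {"relevance": 0.5, "authority": 0.25, "engagement": 0.15, "freshness": 0.10}

@dataclass
class Candidate:
    url: str
    relevance: float   # each signal is assumed to be normalized to the range 0..1
    authority: float
    engagement: float
    freshness: float

def combined_score(candidate):
    """Weighted sum of the four signals."""
    return sum(weight * getattr(candidate, name) for name, weight in WEIGHTS.items())

candidates = [
    Candidate("https://example.com/off-topic", 0.2, 0.9, 0.7, 0.8),
    Candidate("https://example.com/new-post", 0.7, 0.4, 0.6, 0.9),
    Candidate("https://example.com/old-guide", 0.9, 0.8, 0.5, 0.1),
]
ranked = sorted(candidates, key=combined_score, reverse=True)
for c in ranked:
    print(round(combined_score(c), 3), c.url)
```

With these made-up numbers, the highly relevant older guide still outranks the fresher but less relevant post, because relevance carries the largest weight.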

Handling Multiple Queries

Search engines are designed to handle numerous search queries simultaneously. They use parallel processing and distributed systems, spreading the index and the query load across many machines, to process and respond to these requests quickly. The largest search engines serve many thousands of queries every second. This efficiency is critical for providing a seamless user experience.

Comparison of Two Search Algorithms

| Algorithm | Description | Key Factors | Strengths | Weaknesses |
|---|---|---|---|---|
| PageRank | An algorithm that determines the importance of a web page based on the number and quality of links pointing to it. | Number of backlinks, importance of the linking pages. | Effective in identifying authoritative websites, relatively simple to understand. | Can be manipulated by creating artificial backlinks, does not measure the relevance of the content itself. |
| TF-IDF | An algorithm that measures the importance of a term in a document relative to a collection of documents. | Term frequency, inverse document frequency, keyword density. | Excellent at identifying the relevance of specific terms within a document and across a corpus. | Can be affected by keyword stuffing, may not fully capture the semantic meaning of a query. |
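
The link-based idea behind PageRank can be sketched with a short power-iteration loop. This is a textbook-style simplification, not any search engine's production code: the four-page `LINKS` graph is invented, and the damping factor of 0.85 is the value commonly quoted in descriptions of the algorithm.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Basic PageRank by power iteration; every link target must be a key in `links`."""
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page gets a small baseline share of rank.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outlinks in links.items():
            if outlinks:
                # A page passes its rank on in equal shares to the pages it links to.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank

# Invented link graph: page -> pages it links to.
LINKS = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(LINKS)
for page, score in sorted(ranks.items(), key=lambda item: item[1], reverse=True):
    print(page, round(score, 3))
```

Page C collects links from three other pages and ends up with the highest score, while D, which nothing links to, keeps only the baseline share.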

User Experience and Feedback

Search engines are constantly striving to improve the user experience. A good user experience isn’t just about having a visually appealing interface; it’s about providing relevant and accurate results in a timely manner. A positive user experience fosters trust and encourages repeat use. Users should feel confident that the engine is working efficiently and effectively to find the information they need.

Search engines meticulously monitor user interactions to understand how users navigate their results and identify areas for improvement. This understanding is crucial for optimizing the entire search process, from the initial query to the presentation of results. User feedback, in turn, provides valuable insights into user preferences and expectations, enabling engines to tailor their algorithms and enhance the overall search experience.

Significance of User Experience

User experience (UX) is paramount in the success of any search engine. A positive UX fosters user trust and loyalty. Users are more likely to return to a search engine that provides accurate, relevant, and easily navigable results. A seamless and intuitive experience encourages frequent use, contributing to the platform’s overall growth and popularity.

Methods for Collecting User Feedback

Search engines employ various methods to gather user feedback, including clickstream analysis, query logs, and user surveys. Clickstream data reveals user behavior patterns, such as which links are clicked on, how long users stay on a page, and which search results are ignored. Query logs provide insights into the types of queries users submit, identifying trends and patterns in search behavior.

Surveys directly solicit feedback from users about their search experience, providing qualitative data that complements the quantitative insights from clickstream and query logs.
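
As a small illustration of clickstream analysis, the sketch below computes the click-through rate for each result position from a log. The log format and the sample rows are assumptions made for the example, not a real engine's schema.

```python
from collections import Counter

# Hypothetical log rows: (query, result position shown, whether it was clicked).
LOG = [
    ("python tutorial", 1, True),
    ("python tutorial", 2, False),
    ("python tutorial", 1, True),
    ("python tutorial", 2, True),
    ("python tutorial", 3, False),
    ("python tutorial", 1, False),
]

def ctr_by_position(log):
    """Return clicks divided by impressions for each result position."""
    shown, clicked = Counter(), Counter()
    for _query, position, was_clicked in log:
        shown[position] += 1
        if was_clicked:
            clicked[position] += 1
    return {position: clicked[position] / shown[position] for position in sorted(shown)}

ctr = ctr_by_position(LOG)
print(ctr)
```

A position whose click-through rate is unusually low for its rank is one signal that the result shown there may not be satisfying users.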

Analysis of User Feedback

The collected feedback is analyzed to identify areas where the search engine can improve. For example, if users consistently click on results from a particular website, the search engine may adjust its algorithm to prioritize similar websites in future searches. If users find certain search results irrelevant, the engine may refine its indexing and ranking procedures. User feedback data is crucial for identifying shortcomings and opportunities for enhancement in the search experience.

Adaptation to User Behavior

Search engines continuously adapt to user behavior. As search trends evolve, the engines modify their algorithms to reflect these shifts. This adaptability is critical to maintaining the relevance and accuracy of search results. If a particular topic gains significant popularity, the search engine may adjust its indexing strategies to accommodate this new trend. For example, the rise of social media has influenced how search engines handle user-generated content.

Personalization in Search Results

Personalization in search results is becoming increasingly important. Search engines use data about a user’s past searches and browsing history to tailor results to their specific interests. This personalization enhances the relevance of results, providing users with information more likely to be valuable to them. For instance, if a user frequently searches for information about a particular hobby, the search engine may prioritize results related to that hobby in future searches.

Methods of User Feedback Collection

| Method | Description | Strengths | Weaknesses |
|---|---|---|---|
| Clickstream Analysis | Tracks user interactions with search results, such as clicks, scrolls, and time spent on pages. | Provides quantitative data on user engagement and preference. | Limited to observable actions; doesn’t capture user motivations or frustrations. |
| Query Logs | Records the search queries submitted by users. | Identifies trends and patterns in search behavior. | Doesn’t directly assess user satisfaction with results. |
| User Surveys | Directly asks users for feedback on their search experience. | Provides qualitative insights into user perceptions and frustrations. | Can be time-consuming and expensive to implement. Response bias may influence results. |

Advanced Search Techniques

Unleashing the full potential of search engines goes beyond simple searches. Advanced search operators empower users to refine their queries, achieving more precise and relevant results. Mastering these techniques allows you to navigate the vast digital landscape with greater efficiency and uncover hidden gems of information.

Advanced Search Operators

Advanced search operators are special keywords or symbols that modify how a search engine interprets your query. They enable you to specify criteria, filter results, and target particular types of content, leading to more focused and insightful search results. This precision is crucial when dealing with massive datasets and intricate information.

Using Quotation Marks

Using quotation marks around a phrase forces the search engine to find results containing the exact phrase in the specified order. This is invaluable for locating precise wording, avoiding overly broad results, and identifying specific concepts. For example, searching for “best Italian restaurants in Rome” will yield different results than searching for “best Italian restaurants” or “restaurants in Rome”.

Using the Minus Sign (-)

The minus sign (-) excludes specific terms from your search results. This is a powerful tool for filtering out unwanted information. For example, searching for “best smartphones -Samsung” will exclude results mentioning Samsung smartphones, allowing you to focus on other brands.

Using the Asterisk (*)

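The asterisk (*) acts as a wildcard. In major web search engines such as Google, it stands in for one or more whole words, usually inside a quoted phrase: searching for “the * of search engines” matches phrases like “the history of search engines”. Truncation within a word, where “natur*” matches “nature” and “natural”, is a feature of many library catalogs and research databases rather than of general web search, which typically handles word variations automatically.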

Using Site Search Operators

Site-specific searches allow you to limit results to a particular website or domain. This is incredibly helpful for finding specific information within a known source. For example, searching for “site:wikipedia.org history of computers” will restrict results to articles about the history of computers on Wikipedia.

Using Boolean Operators (AND, OR, NOT)

Boolean operators refine searches by specifying relationships between keywords. “AND” requires both terms to appear in the results, “OR” allows either term to appear, and “NOT” excludes results containing a specific term. For example, searching for “jaguar AND car” will only return results containing both terms. Support varies by engine: many web search engines treat a space between terms as an implicit AND and use the minus sign in place of NOT.
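
Conceptually, these operators correspond to set operations on the lists of documents that contain each term. The sketch below shows that correspondence with Python sets; the `POSTINGS` data and document IDs are made up for the example.

```python
# Hypothetical postings: term -> set of document IDs containing that term.
POSTINGS = {
    "jaguar": {1, 2, 3, 4},
    "car": {2, 4, 5},
    "cat": {1, 3, 6},
}

def docs(term):
    """Return the documents containing a term (empty set if the term is unknown)."""
    return POSTINGS.get(term, set())

jaguar_and_car = docs("jaguar") & docs("car")   # AND -> intersection
car_or_cat = docs("car") | docs("cat")          # OR  -> union
jaguar_not_cat = docs("jaguar") - docs("cat")   # NOT -> difference

print(sorted(jaguar_and_car), sorted(car_or_cat), sorted(jaguar_not_cat))
```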

Handling Complex Queries

Search engines employ sophisticated algorithms to process complex queries, factoring in the relationships between search terms. These algorithms are designed to interpret the intent behind the query and prioritize relevant results. While the exact algorithms are proprietary, the underlying principles involve keyword matching, context analysis, and ranking methodologies.

Customizing Search Queries

The potential for customization in search queries is significant. Users can utilize a combination of operators to achieve highly specific and focused results. Experimentation and practice are key to discovering the optimal search strategies. The combination of these techniques leads to an improved search experience.
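
To show how a combined query might be broken into its parts, here is a minimal parser sketch that recognizes quoted phrases, minus-sign exclusions, and the site: operator. The `parse_query` function and its output format are hypothetical; real engines parse queries in far more sophisticated ways.

```python
import re

# Matches either a double-quoted phrase or a run of non-space characters.
TOKEN = re.compile(r'"([^"]+)"|(\S+)')

def parse_query(query):
    """Split a query into exact phrases, plain terms, excluded terms, and a site filter."""
    parsed = {"phrases": [], "terms": [], "excluded": [], "site": None}
    for phrase, word in TOKEN.findall(query):
        if phrase:
            parsed["phrases"].append(phrase)
        elif word.startswith("site:"):
            parsed["site"] = word[len("site:"):]
        elif word.startswith("-") and len(word) > 1:
            parsed["excluded"].append(word[1:])
        else:
            parsed["terms"].append(word)
    return parsed

parsed = parse_query('"history of computers" site:wikipedia.org -games timeline')
print(parsed)
```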

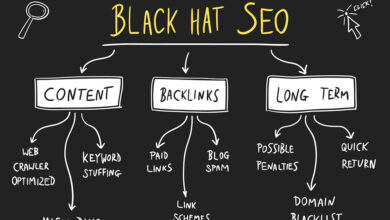

Search Engine Optimization (SEO)

Search Engine Optimization (SEO) is the practice of enhancing a website’s visibility in search engine results pages (SERPs). A higher ranking in SERPs generally translates to more organic traffic, which is crucial for any website aiming to reach a wider audience and achieve its business objectives. Understanding how search engines work is fundamental to effective SEO strategies. SEO is not a one-time fix but an ongoing process that requires adapting to the evolving algorithms and user behavior patterns of search engines.

Success in SEO hinges on understanding and implementing effective strategies that align with search engine guidelines and user intent.

Importance of SEO in Relation to Search Engines

Search engines prioritize websites that provide relevant and valuable content to users. Effective SEO strategies help search engines understand the context and value of a website’s content, making it easier for the search engine to present it to users searching for relevant information. Websites that are not optimized for search engines often get buried in the search results, leading to a loss of potential visitors.

Content Optimization for Search Engine Visibility

Content optimization plays a pivotal role in improving a website’s visibility. Creating high-quality, informative, and engaging content that addresses user queries is paramount. The content should be well-structured, using headings, subheadings, and bullet points to improve readability. Keyword research is crucial to identify the terms users are searching for, and incorporating these keywords naturally within the content enhances the website’s relevance to search queries. This can lead to higher rankings and increased organic traffic.

Website Optimization Techniques for Search Engines

Several techniques contribute to website optimization. Technical optimization focuses on aspects like site speed, mobile-friendliness, and security (HTTPS). High-quality backlinks from reputable websites indicate the credibility and authority of a site, thus improving its ranking. A well-structured site architecture with clear navigation makes it easier for search engines to crawl and index the content. All these elements collectively contribute to a user-friendly and search-engine-friendly website.

- Site Speed: Page load time is a critical factor in user experience and search engine rankings. Slow loading websites lead to high bounce rates, impacting rankings. Optimizing images, leveraging caching mechanisms, and choosing a fast hosting provider are essential steps to enhance site speed.

- Mobile-Friendliness: The increasing use of mobile devices necessitates websites to be mobile-friendly. A responsive design ensures a seamless experience across different screen sizes, contributing to improved rankings.

- Backlinks: High-quality backlinks from authoritative websites signal trust and relevance to search engines. Building a strong backlink profile through guest blogging, content marketing, and social media promotion can significantly improve a website’s ranking.

- Structured Data Markup: Using structured data markup, like Schema.org, helps search engines understand the content of a webpage, leading to rich snippets in search results. This improves click-through rates and visibility.
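
As an illustration of the structured data point above, the sketch below builds a small Schema.org `Article` description as JSON-LD and wraps it in the script tag that would go into a page's HTML. The author name and publication date are placeholders, not facts about this article.

```python
import json

# Schema.org "Article" markup; the author and date values are placeholders.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "How Do Search Engines Work? A Deep Dive",
    "author": {"@type": "Person", "name": "Example Author"},
    "datePublished": "2024-01-01",
}

# JSON-LD is embedded in a page inside a script tag of type application/ld+json.
snippet = (
    '<script type="application/ld+json">\n'
    + json.dumps(article, indent=2)
    + "\n</script>"
)
print(snippet)
```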

Impact of Different SEO Strategies

The impact of different SEO strategies varies depending on the specific strategy, the website, and the competitive landscape. Some strategies might yield quick results, while others may take longer to show significant improvements. A holistic approach that combines various strategies often yields the best results.

Comparison of SEO Strategies

| Strategy | Description | Impact |
|---|---|---|
| On-Page Optimization | Optimizing website content, meta descriptions, and HTML elements for search engines. | Improves relevance, readability, and site structure. |
| Off-Page Optimization | Building backlinks from other reputable websites. | Increases website authority and trustworthiness. |
| Technical SEO | Improving website performance, security, and mobile-friendliness. | Enhances user experience and search engine crawlability. |
| Content Marketing | Creating valuable content to attract and engage target audiences. | Builds brand authority, attracts backlinks, and drives organic traffic. |

Security and Privacy Concerns

Search engines are powerful tools, but their operation raises significant security and privacy concerns. Users entrust them with vast amounts of personal data, and the potential for misuse or breaches is a constant concern. Maintaining user trust requires a multi-faceted approach, addressing both the security measures employed by search engines and the privacy concerns surrounding their operation.

Protecting user data and ensuring the integrity of search results are paramount to the long-term viability of these services. Robust security measures, transparent privacy policies, and a commitment to user feedback are essential to mitigating these concerns and building public confidence.

Security Measures Employed by Search Engines

Search engines implement various security measures to protect user data and maintain the integrity of their systems. These measures aim to prevent unauthorized access, protect against malicious attacks, and safeguard the data entrusted to them. Robust authentication protocols and regular security audits are crucial elements in this process.

- Data Encryption: Search engines use encryption to protect user data during transmission. This ensures that sensitive information, such as search queries and personal details, cannot be intercepted by unauthorized parties. HTTPS protocols are commonly used to encrypt data exchanged between the user’s browser and the search engine’s servers.

- Access Control Mechanisms: Strict access control measures are in place to limit access to sensitive data. Only authorized personnel have access to critical systems and user information. Multi-factor authentication and role-based access control are common security measures used to prevent unauthorized access to sensitive data.

- Regular Security Audits: Continuous security audits are conducted to identify vulnerabilities and potential weaknesses in the search engine’s infrastructure. This proactive approach helps to mitigate risks and ensure that systems are resilient to potential attacks. These audits often involve penetration testing and vulnerability scanning.

Privacy Concerns Related to Search Engine Use

Concerns about user privacy are central to the use of search engines. Users share their search queries and browsing history, creating a detailed profile of their interests and preferences. The collection and use of this data raise concerns about potential misuse and the potential for discrimination or targeted advertising.

- Data Collection and Usage: Search engines collect vast amounts of user data, including search queries, browsing history, location data, and interactions with ads. Transparency in data collection practices and clear policies on how this data is used are crucial for building trust.

- Targeted Advertising: The use of collected data for targeted advertising raises concerns about privacy violations. Users may feel uncomfortable with their online behavior being used to tailor ads, and this may impact their trust in search engines.

- Data Security Breaches: The risk of data breaches is a significant concern. Data breaches can expose sensitive user information to malicious actors, leading to identity theft, financial losses, and reputational damage.

Mechanisms for Protecting User Data

Robust mechanisms are essential for protecting user data. These mechanisms include data minimization, anonymization, and secure storage. These practices are designed to limit the collection of data to only what is necessary and to ensure that data is handled responsibly.

- Data Minimization: Only the data required for specific purposes should be collected. This minimizes the risk of misuse and enhances user privacy. For instance, a search engine should not collect more data than is necessary to provide search results.

- Anonymization: User data should be anonymized wherever possible. This means removing personally identifiable information, such as names and addresses, to protect user privacy. Techniques like pseudonymization can be used to further enhance user privacy.

- Secure Storage: Data should be stored in secure environments to prevent unauthorized access. Data encryption and access controls are crucial to protecting data from breaches.
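
Pseudonymization, mentioned in the list above, can be sketched with a keyed hash: the same user always maps to the same opaque token, but the token cannot be linked back to the user without the secret key. The key, the field names, and the sample record below are placeholders; a real system would also need key management and a policy for the query text itself, which can be identifying on its own.

```python
import hashlib
import hmac

# Placeholder key; in practice this would be randomly generated and stored securely.
SECRET_KEY = b"replace-with-a-randomly-generated-secret"

def pseudonymize(user_id, key=SECRET_KEY):
    """Replace an identifier with a keyed SHA-256 hash (HMAC) of it."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

# Made-up log record.
record = {"user_id": "alice@example.com", "query": "best hiking boots"}
safe_record = {"user": pseudonymize(record["user_id"]), "query": record["query"]}
print(safe_record)
```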

Challenges in Maintaining User Privacy

Maintaining user privacy in the digital age presents significant challenges. The sheer volume of data collected, the evolving nature of technology, and the potential for malicious actors to exploit vulnerabilities are among the key hurdles.

- Data Volume and Complexity: The sheer volume of data collected by search engines makes it challenging to manage and protect. The complexity of data storage and processing systems also poses a significant challenge.

- Evolving Technology: New technologies and techniques are constantly emerging, making it challenging to stay ahead of potential threats. The constant evolution of hacking methods and malicious software requires continuous adaptation of security measures.

- Malicious Actors: Malicious actors are constantly seeking ways to exploit vulnerabilities and gain unauthorized access to user data. Advanced cyberattacks and sophisticated phishing techniques make maintaining security a continuous effort.

Methods for Ensuring Search Results are Not Manipulated

Search engines employ various methods to ensure that search results are not manipulated or influenced by external actors. This includes rigorous quality control measures, algorithms designed to identify and penalize manipulation attempts, and transparent reporting mechanisms.

- Algorithm Design: Search algorithms are designed to identify and penalize websites engaged in manipulative practices. These algorithms are constantly evolving to adapt to new manipulation techniques.

- Quality Control Measures: Search engines implement quality control measures to ensure that the results returned are accurate, relevant, and trustworthy. This includes evaluating the content of websites and assessing their authority.

- Transparency and Reporting: Search engines should provide transparency on their processes and allow users to report potential manipulation attempts. Clear reporting mechanisms are essential for addressing user concerns and improving the quality of search results.

Security Measures Implemented by Major Search Engines

| Search Engine | Data Encryption | Access Control | Security Audits |
|---|---|---|---|
| Google | HTTPS, End-to-End Encryption | Multi-factor authentication, Role-based access | Regular penetration testing, Vulnerability scanning |
| Bing | HTTPS, Encryption Protocols | Strong access controls, Multi-factor authentication | Regular security assessments, Penetration testing |
| DuckDuckGo | HTTPS, Encryption Protocols | Strict access control, Role-based access | Security audits, Vulnerability scans |

Final Wrap-Up

In conclusion, understanding how search engines function provides valuable insight into the digital landscape. From the initial crawl to the final result, a meticulous process guides users to the most relevant information. This intricate system, powered by sophisticated algorithms, ensures a streamlined search experience. Knowing how search engines work empowers users to harness their power, and also provides a deeper appreciation for the technology behind our daily online activities.

Further, understanding the inner workings can also inform strategic approaches to optimize content for search visibility.