Duplicate Content Googles SEO Handling

Duplicate content how does google handle duplicate content for seo – Duplicate content how does Google handle duplicate content for ? This deep dive explores the intricacies of duplicate content, its various forms, and Google’s strategies for identifying and dealing with it. We’ll uncover how unintentional and intentional duplication affects search rankings, and provide practical solutions for avoiding and rectifying these issues.

Understanding how Google’s algorithms detect and process duplicate content is crucial for maintaining a healthy strategy. This involves knowing the different types of duplication, from identical copies to near-identical versions and derivative works. We’ll also look at the impact on search rankings, potential penalties, and how to avoid these pitfalls through proper website architecture and content creation.

Understanding Duplicate Content: Duplicate Content How Does Google Handle Duplicate Content For Seo

Duplicate content is a significant concern, as search engines aim to provide unique and valuable content to users. Duplicate content, in its various forms, can confuse search engines and potentially harm a website’s search ranking. Understanding the different types and causes of duplicate content is crucial for website owners and content creators to maintain a healthy online presence.Duplicate content, in its various forms, can confuse search engines and potentially harm a website’s search ranking.

Google’s got a pretty strict policy on duplicate content, impacting SEO. Knowing how to find new, valuable keywords, like with find new profitable SEO keywords in under 2 minutes , is key to avoiding those issues. Ultimately, unique content is crucial for good SEO, so finding fresh keywords that aren’t already overused is vital for success in search results.

Understanding the different types and causes of duplicate content is crucial for website owners and content creators to maintain a healthy online presence. This discussion will delve into the intricacies of duplicate content, helping you grasp the nuances and avoid pitfalls.

Definition of Duplicate Content

Duplicate content refers to content that is substantially similar or identical to other content already available online. This similarity can manifest in various ways, from nearly identical text to derivative material. Search engines strive to identify and penalize websites that utilize duplicate content, as it negatively impacts the user experience and undermines the search results’ quality.

Types of Duplicate Content

Different forms of duplicate content exist, each with varying degrees of impact on search engine rankings. Understanding these differences is crucial for addressing the issue effectively.

- Identical Content: This involves the exact duplication of content on different web pages or across multiple websites. This is a clear case of duplicate content, as it offers no unique value to users and search engines. A common example of identical content is when a website unintentionally copies content from another website or when a site publishes the same content on multiple pages.

- Near-Identical Content: Near-identical content involves significant similarity between pieces of content, often with minor alterations or rewording. The degree of similarity might be enough to mislead users, but not to the point of being a direct copy. This type of duplication can arise from content rewrites or paraphrasing without significant modification.

- Derivative Content: This encompasses content that’s based on existing content but re-expressed in a different format or style. It could involve translating content into another language or transforming it from a blog post into an article. This is often more challenging to detect than identical or near-identical content because the fundamental information is similar, but the form is different.

Causes of Duplicate Content

Duplicate content can arise from both intentional and unintentional actions. Understanding these causes is essential for proactive measures to prevent the issue.

- Unintentional Duplicate Content: This can occur due to technical errors, such as content appearing on multiple pages due to a flawed website structure. Website owners might not even realize they have duplicated content. Another unintentional example includes publishing content on multiple platforms without careful consideration of content uniqueness.

- Intentional Duplicate Content: This type of duplicate content is created intentionally, often with the aim of increasing website traffic or search engine rankings. This can include creating multiple sites that essentially publish the same content, which can be harmful to . Black-hat techniques often involve this type of duplication.

Comparison of Duplicate Content Types

The table below summarizes the different types of duplicate content, highlighting their definitions, examples, and impact on search engine rankings.

| Type | Definition | Examples | Impact on Search Ranking |

|---|---|---|---|

| Identical Content | Exact duplication of content across multiple pages or websites. | Republishing an article on two different pages of the same website without any modification. | Significant negative impact; search engines penalize sites with identical content. |

| Near-Identical Content | Content with significant similarity, often due to rewording or minor alterations. | Paraphrasing an article from another website, keeping the same structure and most of the content. | Moderate negative impact; search engines might flag the content. |

| Derivative Content | Content based on existing content but re-expressed in a different format or style. | Translating an article from English to Spanish. | Less negative impact if done correctly; however, maintaining uniqueness is still important. |

Google’s Approach to Duplicate Content

Understanding duplicate content is crucial for success. Duplicate content, whether intentional or unintentional, can negatively impact a website’s search engine rankings. Google’s sophisticated algorithms are designed to identify and handle this issue, aiming to provide users with high-quality, unique content.Google’s approach to duplicate content is multifaceted, encompassing various techniques to detect and categorize different forms of duplication.

This involves intricate analyses of content across the web, aiming to provide a user-friendly search experience by presenting the most relevant results.

Google’s Algorithms for Detecting Duplicate Content

Google employs a complex network of algorithms to identify duplicate content. These algorithms examine various factors, including textual similarity, code structure, and even the context surrounding the duplicated content. The algorithms look for instances where significant portions of text or code are identical across different web pages. This approach allows Google to identify instances of outright duplication, as well as subtle variations designed to circumvent detection.

Differentiating Intentional and Unintentional Duplicate Content

Google’s algorithms attempt to distinguish between intentional and unintentional duplication. Intentional duplication, such as content scraping or plagiarism, is often penalized more severely than unintentional duplication, such as unintentional mirroring or similar formatting. Factors like the source of the duplication and the context surrounding it help Google’s algorithms to make these distinctions.

Google’s Strategies for Handling Various Forms of Duplicate Content

Google employs several strategies to handle different types of duplicate content. For example, if a website mirrors its content across various URLs, Google might choose one canonical version as the authoritative source. This process ensures that search results don’t display the same content multiple times, improving user experience. Other strategies include analyzing the content’s context and quality to determine the best representation of the information.

- Canonicalization: Google identifies the canonical or primary version of a page and directs search engine crawlers to that version, preventing indexing of duplicate copies.

- Content Quality Evaluation: Google evaluates the quality and relevance of content. Duplicate content with higher quality and more relevant information is favored over lower-quality duplicates.

- Contextual Analysis: Google analyzes the context surrounding the duplicated content to determine its nature. If the duplication is part of a broader, well-structured website, it may be handled differently from duplication on a site with poor structure.

Flowchart of Google’s Duplicate Content Processing

A simplified flowchart illustrating the process of duplicate content detection and handling would start with Googlebot crawling a website. Next, the algorithm compares the crawled content with other indexed content. If substantial duplication is detected, Google evaluates the quality and context of the content. Based on the evaluation, Google may choose a canonical URL, deduplicate the content, or penalize the website.

The process aims to present the most relevant and unique content to users.

Google’s algorithm is pretty smart about duplicate content, penalizing sites with identical or near-identical pages. This is crucial for SEO, as it ensures that high-quality, unique content gets the visibility it deserves. Understanding how to avoid duplicate content issues, especially when using Facebook automated ads to promote your site, is key to ranking well. Facebook automated ads can be a powerful tool, but if not managed correctly, can lead to duplicate content problems.

So, always double-check that your Facebook ads aren’t inadvertently creating duplicate content issues, ensuring that your efforts are optimized for success in search results.

Impact of Duplicate Content on

Duplicate content significantly harms a website’s search engine optimization () efforts. It confuses search engines, leading to lower rankings and diminished visibility. This can ultimately impact website traffic and revenue. Search engines aim to provide users with unique and relevant content, and duplicate content often fails to meet these criteria.

Negative Consequences on Search Rankings

Search engines prioritize original content. Duplicate content, whether intentionally or unintentionally created, undermines this principle. When search engines encounter identical or near-identical content across multiple pages, they struggle to determine which version is most valuable and relevant to a user’s search query. This ambiguity often results in lower rankings for all affected pages. In essence, search engines devalue pages with duplicate content, potentially demoting them to less prominent positions within search results.

Penalties for Duplicate Content Violations

Google, the dominant search engine, may impose penalties for substantial duplicate content violations. These penalties can manifest in various ways, including lower rankings, reduced visibility, or even complete removal from search results. Penalties are typically more severe when the duplicate content is substantial and intentionally created for malicious purposes, like link manipulation or stuffing. This suggests that the quality and intent behind the duplication play a crucial role in the severity of the penalty.

Examples of Harm to Website Visibility, Duplicate content how does google handle duplicate content for seo

Duplicate content can harm a website’s visibility in numerous ways. A common example is when a website mirrors content from another source without proper attribution. This not only dilutes the site’s unique value but also exposes it to potential penalties. Another instance involves dynamically generated content that unintentionally produces duplicate versions of the same page for different parameters.

These situations illustrate how seemingly innocent errors or design choices can negatively impact .

Correlation Between Duplicate Content and Ranking Positions

The following table illustrates a hypothetical correlation between the level of duplicate content and potential search ranking positions. It highlights the negative impact on visibility as the duplication increases.

| Duplicate Content Levels | Potential Rankings | Impacts |

|---|---|---|

| Minor Duplication (e.g., small sections of text copied from other sources) | Potentially slightly lower rankings | Reduced visibility; minor loss of traffic |

| Moderate Duplication (e.g., significant portions of content duplicated) | Lower rankings, possible demotion | Significant loss of traffic; potentially affecting conversions |

| High Duplication (e.g., entire pages copied) | Very low rankings, possible removal from search results | Severe loss of traffic; potentially irreversible damage to |

Strategies to Avoid Duplicate Content

Preventing duplicate content is crucial for maintaining a healthy website and achieving optimal search engine rankings. Duplicate content can significantly harm a website’s performance, as search engines struggle to determine which version of the content to index and display. Implementing effective strategies for avoiding duplicates ensures a positive user experience and maximizes the impact of your website’s content on search results.Effective strategies for preventing duplicate content on a website involve careful planning and execution throughout the content creation and website architecture phases.

This proactive approach will save significant headaches and ensure your website remains a valuable resource for users and search engines.

Website Architecture Best Practices

Careful website structuring plays a vital role in avoiding duplicate content. Poorly designed architecture can lead to numerous duplicate pages, diluting the impact of your original content and confusing search engines. Implementing proper canonicalization, using unique URLs, and preventing redirection loops are critical steps in this process.

- Canonicalization: Implementing canonical tags on pages with duplicate content is essential. This tells search engines which version of the page should be considered the primary one, helping to avoid indexing multiple identical versions. This is crucial for handling different URL structures that might point to the same content.

- Unique URLs: Ensure every piece of content has a unique URL. Avoid using parameters or query strings that unnecessarily alter URLs without changing the content. For example, avoid URLs that include date parameters or pagination numbers if the content is identical. Each piece of content should have its own distinct address.

- Avoid Redirection Loops: Implementing redirect chains or loops can cause duplicate content issues. Ensure that redirects are concise and point directly to the intended page, without unnecessary intermediaries. Regularly check and maintain redirect chains to prevent issues.

Content Creation Guidelines

Creating unique and valuable content is paramount to avoiding duplicate content issues. Consistency in content creation, coupled with originality, can help prevent instances of plagiarism or near-duplicates.

- Original Content: Prioritize creating original content. Avoid copying content from other websites or rephrasing existing content without substantial modifications. Focusing on unique perspectives and angles is essential.

- Content Variety: Diversify the type of content you produce. Include a range of formats, including blog posts, articles, videos, and infographics. This diverse approach will prevent your website from appearing repetitive to search engines.

- Regular Updates: Updating existing content regularly can help to prevent issues with duplicate content. By adding new information, modifying existing text, or adding new sections, you demonstrate that your content is active and valuable.

Content Optimization for Unique Indexing

Optimizing your content for unique indexing is a vital part of preventing duplicate content issues. The following strategies will ensure that search engines can easily identify the distinct value of each piece of content.

- Unique Content: Ensure that every piece of content is unique in its structure, tone, and message. While utilizing similar themes or ideas is acceptable, ensure that the actual content is distinct and valuable to the reader.

- Freshness: Content freshness is important for search engines. Regularly updating content with new information or perspectives demonstrates its relevance and prevents it from being perceived as stale or redundant.

- Varying Perspectives: Present information from diverse perspectives or angles to add originality and depth to your content. This helps in differentiating your content from other sources.

Handling Existing Duplicate Content

Dealing with duplicate content on an existing website can be a complex challenge. Ignoring these issues can lead to significant drops in search engine rankings and a less effective online presence. A proactive approach to identifying and fixing these problems is crucial for maintaining a healthy website and organic traffic.Addressing duplicate content requires a systematic approach that involves identifying the problem areas, understanding the root cause, and implementing appropriate solutions.

This process often necessitates technical expertise and careful consideration of the website’s architecture and content strategy.

Identifying Duplicate Content

Identifying duplicate content on a website is the first step in remediation. Several methods can be employed, ranging from simple manual checks to sophisticated automated tools. Manual review, while time-consuming, can be effective for smaller sites. Larger sites require more robust methods.

- Manual Inspection: Carefully review content across different pages to identify similar or identical text blocks. This approach is effective for smaller websites, but it becomes inefficient for extensive content repositories.

- Automated Tools: Various software tools are available to automate the process of detecting duplicate content. These tools can compare content across different pages and highlight similarities. Tools like Copyscape and Siteliner provide automated analysis and reporting.

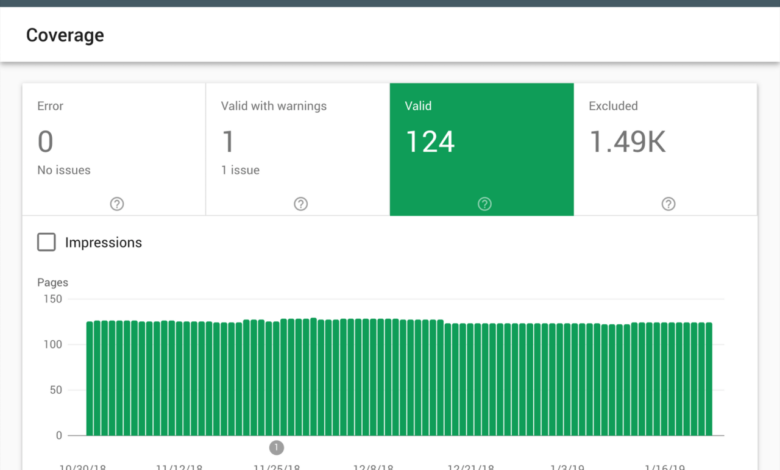

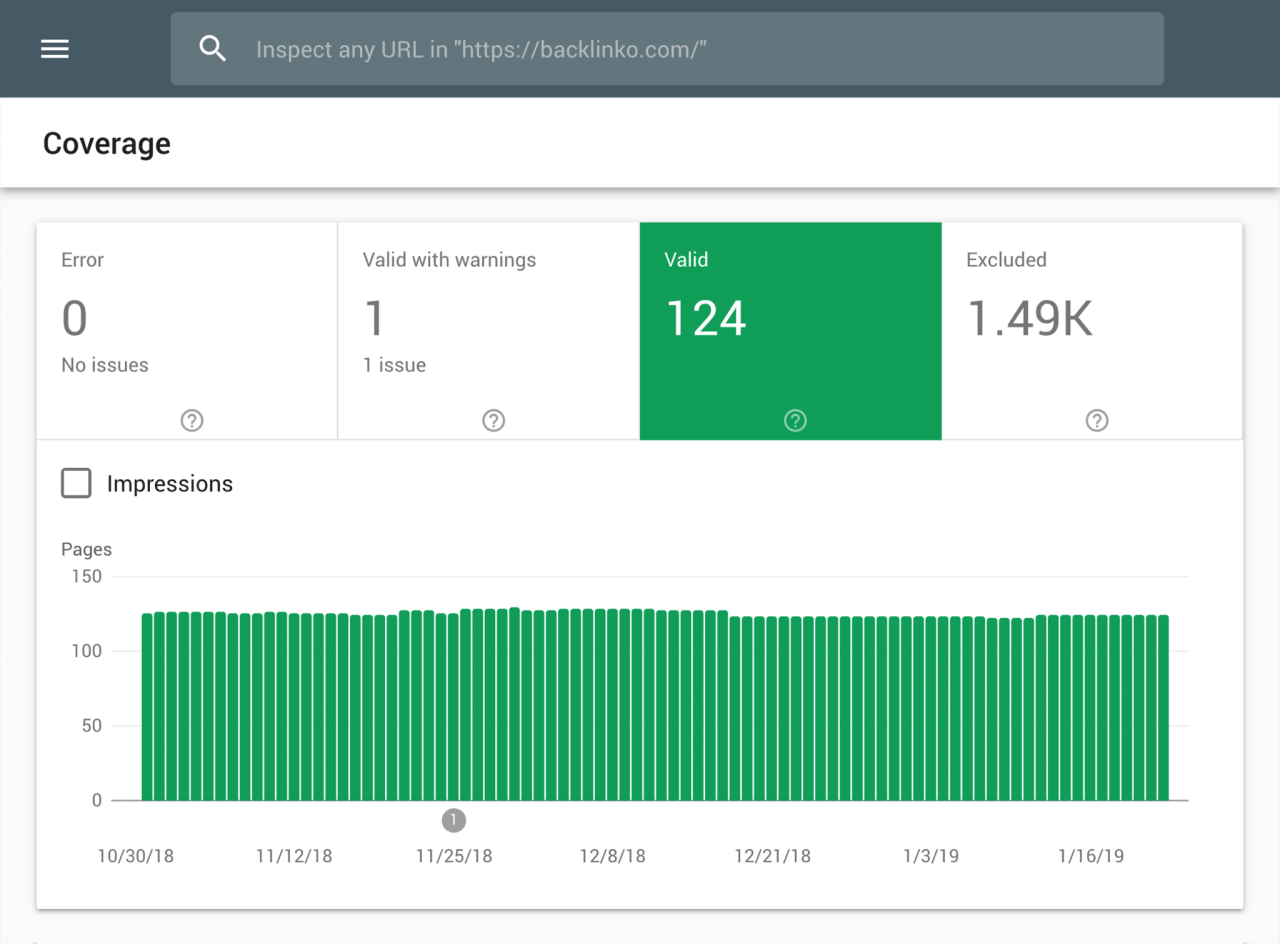

- Search Engine Crawling: Google Search Console can reveal duplicate content issues by identifying pages with similar content. Using this data, website owners can pinpoint areas requiring attention.

Strategies for Addressing Duplicate Content Issues

Several strategies can be implemented to address duplicate content problems. These strategies are tailored to the specific circumstances of the issue, ranging from simple rewrites to more complex canonicalization processes.

- Content Modification: Rewriting or modifying duplicate content to make it unique is often the most effective approach. This ensures that each page offers distinct and valuable information to users and search engines.

- Canonicalization: Using the canonical tag, website owners can specify which version of a page should be considered the authoritative one. This helps search engines understand the preferred version of the content.

- Redirection: Redirecting duplicate content to the canonical version is a simple way to avoid confusing search engines. This ensures that all links point to the preferred version.

Tools and Techniques for Duplicate Content Removal or Canonicalization

Various tools and techniques can be employed to remove or canonicalize duplicate content effectively. Choosing the right tool depends on the scale of the website and the resources available.

- Copyscape: A widely used tool for identifying duplicate content across the web. It compares content to billions of pages to pinpoint similarities. Copyscape provides detailed reports, including the source of the duplication.

- Siteliner: This tool offers comprehensive website analysis, including the identification of duplicate content. It helps pinpoint areas of concern and provides suggestions for improvement. Siteliner provides a free version with limited features and a paid version with advanced capabilities.

- Screaming Frog Spider: A powerful crawler that helps to identify and analyze various website issues, including duplicate content. It allows for in-depth analysis of the website’s structure, and can identify patterns and areas of concern. This is particularly helpful for larger websites.

Procedures for Fixing Duplicate Content Problems

A systematic procedure for fixing duplicate content problems is crucial for success. This involves careful analysis, identification, and implementation of solutions.

- Identify Duplicate Content: Use the appropriate tools and techniques (e.g., manual inspection, automated tools) to pinpoint duplicate content.

- Choose the Best Solution: Decide on the most appropriate solution based on the severity of the duplication and the website’s structure. (e.g., content modification, canonicalization, redirection).

- Implement the Solution: Carefully implement the chosen solution to avoid further problems. Ensure the changes are accurately reflected in the website’s code and structure.

- Test and Monitor: Thoroughly test the changes to ensure they have resolved the duplication issue and are not introducing new problems. Monitor the website’s performance and search engine rankings to assess the effectiveness of the solution.

Comparison of Duplicate Content Identification Tools

| Tool Name | Features | Pricing |

|---|---|---|

| Copyscape | Duplicate content detection, source identification, report generation | Various plans, starting from free trials |

| Siteliner | Comprehensive website analysis, duplicate content detection, audits | Free version with limited features, paid plans available |

| Screaming Frog Spider | Website crawling, duplicate content detection, technical audits | Paid software |

Case Studies of Duplicate Content Issues and Solutions

Duplicate content can severely damage a website’s search engine rankings. Understanding how different websites have addressed this issue and the impact of their solutions provides valuable insights for website owners and professionals. This section will delve into real-world examples, detailing the problems, the implemented solutions, and the resulting improvements.Duplicate content problems stem from various sources, including content mirroring, scraped content, and poorly implemented content management systems.

Effective solutions require a comprehensive understanding of the root cause and careful execution. These case studies demonstrate that identifying and addressing duplicate content issues is crucial for maintaining or improving search engine visibility.

Case Study 1: The “Mirror Site” Problem

A travel blog, “Wanderlust Adventures,” had inadvertently created duplicate content by mirroring its blog posts on a separate subdomain. This resulted in search engines indexing the same content from both URLs, diluting the blog’s authority and confusing search algorithms. The solution involved canonicalization. The blog implemented a 301 redirect from the duplicate URL to the primary URL. This instructed search engines to consider the primary URL as the authoritative version.

Google’s approach to duplicate content significantly impacts SEO. Understanding how search engines like Google handle duplicate content is crucial for a strong online presence. A comprehensive SEO website analysis, like the one offered by JarDigital, seo website analysis , can pinpoint duplicate content issues and offer strategies for improvement. Ultimately, minimizing duplicate content is key to maximizing your site’s search engine rankings.

This strategic redirection of traffic effectively consolidated link equity and prevented the dilution of efforts. The impact on search rankings was significant. Within three months, “Wanderlust Adventures” saw a noticeable increase in organic traffic and improved rankings for relevant searches, particularly those related to popular travel destinations. The blog’s search visibility grew steadily.

Case Study 2: The Content Scrape

A small e-commerce site, “Cozy Candles,” experienced a decline in organic traffic. Investigation revealed that competitors were scraping their product descriptions, creating near-identical listings on their own sites. This led to a significant drop in search rankings as search engines saw their site as less unique. The solution involved a robust content protection system. “Cozy Candles” implemented a no-index tag for pages that contained scraped content.

They also added unique product descriptions and high-quality images to their website. Furthermore, they actively engaged in content syndication by partnering with relevant bloggers, ensuring their unique content was published on multiple authoritative platforms. These measures successfully improved their site’s visibility. “Cozy Candles” saw a noticeable improvement in search rankings, leading to a consistent increase in organic traffic and sales.

Their unique selling points, high-quality descriptions, and engaging content resonated with both customers and search engines.

Case Study 3: The CMS Issue

A company website, “Tech Solutions,” used a poorly configured content management system. This resulted in multiple URLs pointing to the same content. This problem was particularly problematic, as it affected a large number of pages. The solution involved implementing a comprehensive canonicalization strategy. The team used the sitemap and robots.txt files to specify the canonical URLs for each page.

The team also employed a robust 301 redirect strategy. This streamlined the site architecture, eliminating duplicate content issues. The impact on search rankings was substantial. “Tech Solutions” experienced a significant increase in organic traffic and improved rankings across various s, leading to a noticeable uptick in customer inquiries and conversions.

Advanced Techniques for Avoiding Duplicate Content

Avoiding duplicate content is crucial for maintaining a strong presence. Simply removing identical content isn’t enough; sophisticated strategies are needed to tackle the complexities of modern web development, particularly when dynamic content generation is involved. This section explores advanced techniques for mitigating duplicate content issues, providing practical examples and highlighting their impact on search engine rankings.Dynamic content generation, while beneficial for user experience, often presents challenges regarding duplicate content.

The key is to identify and manage these instances effectively, ensuring that search engines can crawl and index the unique aspects of your website without encountering duplicates. By understanding and implementing advanced strategies like canonicalization and careful handling of dynamic parameters, you can maintain a clean and optimized website structure.

Dynamic Content Generation

Handling dynamic content requires careful planning to avoid creating duplicate content. Dynamic websites often generate pages based on user input or database queries, which can lead to identical content for different URLs. Strategies for addressing this issue include using server-side includes (SSI), parameterized URLs, and employing template engines.

- Server-Side Includes (SSI): SSI allows for dynamic content insertion within HTML templates. This method helps generate unique pages without duplicating entire content blocks. For example, if you have a news section, SSI can dynamically insert the latest news articles into a template without recreating the entire page for each article.

- Parameterized URLs: Using parameters in URLs can help distinguish similar content. For example, a product page can include parameters for different product variations (size, color, etc.), creating unique URLs for each variation, rather than creating multiple identical pages.

- Template Engines: These tools allow for dynamic content generation within a pre-defined template structure. By using variables and placeholders, the engine inserts data into the template to create unique pages, without generating duplicate content.

Canonicalization

Canonicalization is a vital technique for managing duplicate content issues. It allows you to specify the preferred URL for similar or identical content to search engines. Using the canonical tag in the HTML head section directs search engines to the preferred version. This is particularly useful when content appears on multiple URLs (e.g., on different versions of a website).

- Implementation: The canonical tag is placed in the ` ` section of the HTML document and includes the URL of the preferred version. For example: ``

- Impact: Using canonical tags helps search engines understand the intended preferred URL, preventing them from indexing duplicate content and associating ranking signals with the incorrect page.

- Example: Imagine a product page that appears on both www.example.com and m.example.com. Using a canonical tag on the mobile version, pointing to the desktop version, ensures search engines index the desktop page and associate the rankings with the more comprehensive page.

Robots.txt and Meta Tags

Robots.txt and meta tags play a supporting role in duplicate content management. Robots.txt allows you to instruct search engine crawlers which pages to avoid indexing, preventing them from crawling potentially duplicate content. Meta tags, like the `noindex` tag, can prevent specific pages from being indexed by search engines.

- Robots.txt: This file tells search engine crawlers which pages or directories they should not crawl. This is particularly useful when dealing with dynamically generated content you don’t want indexed.

- Meta Tags: Meta tags, such as the `noindex` tag, can prevent search engines from indexing specific pages entirely, preventing duplicate content issues from affecting .

Final Review

In conclusion, managing duplicate content is vital for website success. By understanding Google’s approach, implementing preventative measures, and addressing existing issues, you can optimize your site for better search rankings. The strategies presented here offer a comprehensive guide to navigating the complexities of duplicate content and ensuring your website is indexed accurately and favorably by search engines.