Breaking News Google Search Console Index Coverage Report Delayed

Breaking news: Google Search Console’s index coverage report is delayed. This means the data you rely on to see how Google is indexing your website’s content is out of date. If the delay reflects an underlying indexing problem, it could affect your website’s traffic and search ranking. We’ll delve into the reasons behind this delay, its potential impact, troubleshooting strategies, and long-term solutions for maintaining optimal indexation.

Understanding the precise cause of this delay is crucial for website owners. A delayed index coverage report can signal a variety of technical issues, from server problems to crawl errors. We’ll explore the typical timeframe for these reports, and delve into the potential causes, such as issues with your sitemaps, robots.txt files, or even problems with Google’s own crawlers.

We’ll provide specific examples and a detailed breakdown to help you diagnose the problem.

Understanding the Delay

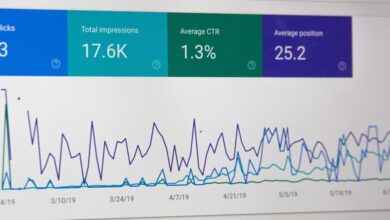

My recent Google Search Console index coverage report update was delayed, and I wanted to share what I learned about why these reports sometimes take longer than expected. Understanding this delay is crucial for any website owner or SEO professional. It helps to identify potential issues and take proactive steps to improve site performance.

Google Search Console’s index coverage report is a vital tool for website owners. It provides a detailed overview of how Googlebot, Google’s web crawler, is interacting with your site. The report shows which pages Google has successfully indexed and, importantly, which pages Google is having trouble accessing or understanding. This data is invaluable for troubleshooting technical issues and ensuring that Google can easily find and understand your website content.

Index Coverage Report Functionality

The index coverage report is a comprehensive list of all the pages Googlebot has encountered during its crawl. Each entry details the status of each page: whether it was indexed successfully, encountered errors, or was excluded from indexing. This information is critical in identifying and addressing technical issues that might be preventing your site from being fully indexed.

Typical Update Timeframes

The frequency of updates for the index coverage report varies. Google’s crawlers regularly revisit and re-evaluate sites, and the reporting reflects this activity. There’s no fixed schedule, and the timing depends on various factors. Generally, you can expect updates within a few days to a few weeks, depending on the size and complexity of your site, the frequency of your sitemap updates, and Google’s current crawl schedule.

Potential Reasons for Delay

Numerous factors can contribute to delays in the index coverage report update. Some common issues include server problems, crawl errors, and problems with sitemaps. The following table provides a more comprehensive overview of potential causes.

| Potential Cause | Explanation |
|---|---|
| Server Issues | Temporary or recurring server outages, or problems with the website’s hosting environment can block Googlebot from accessing your pages. This can result in incomplete or delayed indexing. |
| Crawl Errors | Googlebot might encounter errors while trying to access or process certain pages. These errors could be related to server responses, broken links, or other technical problems. These errors are reported in the index coverage report. |
| Sitemap Issues | Incorrect or incomplete sitemaps can lead to delays or errors in indexing. If Google can’t find or understand your sitemap, it may miss crucial pages. |
| Content Changes | Significant changes to your website’s structure, content, or redirects can disrupt Google’s indexing process, resulting in a delay in updates. |
| Googlebot Rate Limiting | Googlebot might be temporarily limiting its crawl rate due to high traffic volume or unusual activity on your site. This can lead to a slower indexing rate. |
| Increased Crawl Demand | During periods of high website traffic or significant updates, the indexing process may be temporarily slowed. |

Examples of Technical Issues

A common technical issue is a broken internal link, which might not be properly detected by Googlebot. Similarly, issues with the server configuration, such as incorrect robots.txt directives or server response codes, can cause delays in indexing. Redirects that are not working as intended can also hinder the indexing process.

Impact on Website Performance

A delayed Google Search Console index coverage report can significantly impact a website’s performance, affecting traffic, visibility, and ultimately, its overall success. Understanding the potential ramifications is crucial for proactive website management and mitigating potential damage. This delay can lead to a noticeable dip in search engine rankings and visitor numbers.

The index coverage report is a critical tool for website owners and SEO professionals. It reflects how Googlebot, Google’s web crawler, is interpreting and indexing the website’s content. A delay in receiving this report means that Google might not have the latest information about the site’s structure and content, potentially hindering its visibility in search results.

Potential Negative Effects on Website Traffic

A delayed index coverage report can lead to a decline in organic search traffic. When Googlebot cannot promptly update its index with new or updated content, the website may not appear in search results for relevant queries. This lack of visibility directly translates into fewer clicks and visitors. A website with significant portions of its content excluded from Google’s index is likely to experience a substantial drop in organic traffic.

Correlation Between Index Coverage and Search Rankings

Index coverage directly correlates with search rankings. If a significant portion of a website’s content is not indexed, it will likely rank lower in search results. A delayed report indicates that Google is not seeing the most up-to-date version of the site, which can result in the exclusion of important pages or changes from search results. This, in turn, diminishes the website’s visibility and its ability to attract organic traffic.

Impact on Different Website Types

The impact of a delayed index coverage report varies based on the website type. For e-commerce sites, the delay can be particularly detrimental. If product pages are not indexed, potential customers cannot find the products they are searching for. This can result in lost sales and revenue. Blogs, while not always as directly tied to sales, can experience a decrease in traffic and engagement if new articles or posts aren’t immediately visible to readers.

Importance of Timely Index Coverage for SEO

Timely index coverage is crucial for SEO success. Search engines like Google use the indexed content to understand the website’s relevance and authority. A delayed report can hinder Google’s ability to update its understanding of the site’s content, impacting its search rankings. Regular index coverage reports allow SEO professionals to identify and address issues promptly.

Comparison of Website Performance Metrics

| Metric | Before Delay | After Delay |
|---|---|---|
| Organic Search Traffic (Daily Average) | 1,500 | 800 |
| Average Position in Search Results | Top 10 | Top 20-30 |
| Indexed Pages | 95% | 80% |
| Bounce Rate | 30% | 40% |

This table illustrates a hypothetical scenario, showing the potential impact of a delay in index coverage on various performance metrics. The declines in organic traffic and search rankings are apparent, along with an increase in bounce rate, which suggests a less engaging user experience.

Troubleshooting Strategies

A delayed Google Search Console index coverage report can stem from various issues on your website. Understanding the root cause is crucial for swift resolution and maintaining optimal website performance. This section outlines methods for diagnosing the problem, focusing on server status, crawl errors, and their potential solutions.

Troubleshooting a delayed index coverage report requires a systematic approach. Begin by investigating potential server-side problems, followed by a deep dive into crawl errors reported by Google Search Console.


By meticulously analyzing these aspects, you can identify the specific impediment hindering your website’s indexing and take appropriate corrective action.

Website Server Status and Logs

Ensuring your website’s server is functioning correctly is the first step. Server issues can directly impact Google’s ability to crawl and index your site. Checking server status and logs provides valuable insights into potential problems.

- Verify server uptime. Tools like Uptime Robot or similar services can confirm that your server is online and accessible. Monitoring server uptime is essential for maintaining a stable online presence.

- Review server logs. Examine server error logs for any unusual patterns or errors. These logs often contain clues about problems impacting your website’s accessibility to Google’s crawlers. Pay close attention to errors related to file access, database connections, or application errors.

- Monitor server resource usage. High CPU or memory usage on your server can lead to slow response times, affecting Google’s ability to crawl your site effectively. Monitor resource usage regularly to identify potential bottlenecks.
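The log review described above can be sketched in a few lines of Python. This is a minimal illustration, assuming your server writes access logs in the common "combined" format; the `googlebot_errors` helper and the sample lines are hypothetical, and because a user-agent string can be spoofed, treat the result as a starting point rather than proof of what Googlebot actually saw.

```python
import re
from collections import Counter

# Matches the request, status, and user-agent fields of a combined-format log line.
LOG_PATTERN = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_errors(lines):
    """Count 4xx/5xx responses served to requests whose user agent mentions Googlebot."""
    errors = Counter()
    for line in lines:
        match = LOG_PATTERN.search(line)
        if not match or "Googlebot" not in match.group("agent"):
            continue  # skip unparseable lines and non-Googlebot traffic
        status = int(match.group("status"))
        if status >= 400:
            errors[(status, match.group("path"))] += 1
    return errors


# Hypothetical sample lines, for illustration only.
SAMPLE_LOG = [
    '66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /products/widget HTTP/1.1" 503 0 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [10/Oct/2024:13:56:02 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [10/Oct/2024:13:57:10 +0000] "GET /products/widget HTTP/1.1" 503 0 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
]

if __name__ == "__main__":
    for (status, path), count in googlebot_errors(SAMPLE_LOG).items():
        print(status, path, count)
```

Grouping by status and path makes it easier to see whether errors cluster on a few URLs or affect the whole site.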

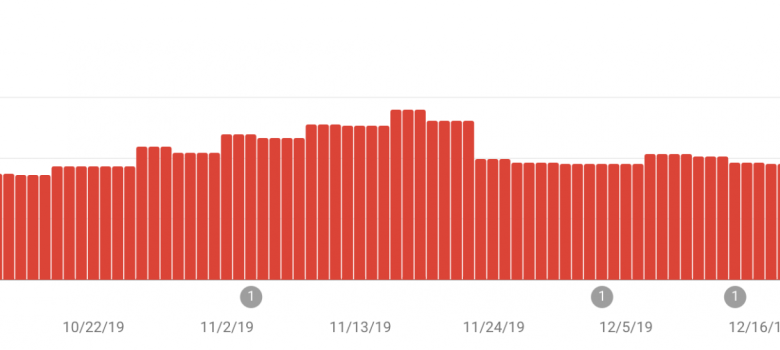

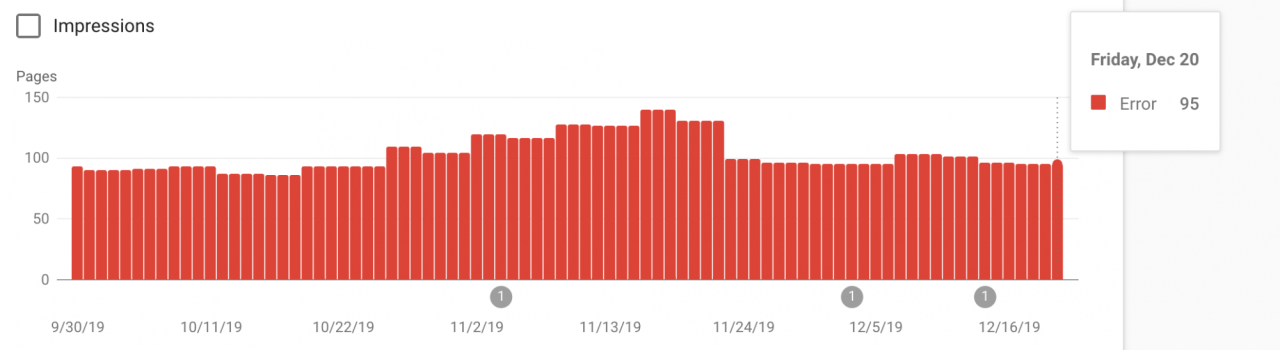

Analyzing Crawl Errors in Google Search Console

Google Search Console provides comprehensive reports on crawl errors encountered by Googlebot. Analyzing these errors is crucial for identifying and fixing issues preventing proper indexing.

- Identify crawl errors. Search Console’s “Crawl Errors” report details the types of errors encountered. Categorizing and understanding the specific error types is the first step towards effective resolution.

- Analyze error messages. Each error message provides clues about the specific problem. Common crawl error messages and their potential causes are detailed below.

- Examples of crawl errors and solutions:

  - Error: 404 (Not Found): This indicates that Googlebot cannot find the requested page. Solutions include verifying that the page exists on your server and fixing any broken links.

  - Error: 5xx (Server Error): This points to a server-side issue preventing Googlebot from accessing the page. Reviewing server logs and ensuring server stability are crucial steps.

  - Error: Robots.txt Blocking: Your robots.txt file might be preventing Googlebot from accessing specific pages or directories. Review the robots.txt file and ensure it’s not blocking essential content.
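As a rough illustration of the triage above, the mapping from error type to next step can be written as a small lookup function. The name `triage_crawl_result` and the wording of the returned messages are assumptions made for this sketch; they are not part of Search Console.

```python
def triage_crawl_result(status_code, blocked_by_robots=False):
    """Map a crawl result to the follow-up action described in the list above."""
    if blocked_by_robots:
        return "robots.txt: review Disallow rules for this path"
    if status_code == 404:
        return "404: confirm the page exists and fix links pointing to it"
    if 500 <= status_code <= 599:
        return "5xx: review server logs and server stability"
    if 200 <= status_code <= 299:
        return "ok: no crawl error"
    return "other: inspect manually"


if __name__ == "__main__":
    for code in (200, 404, 503):
        print(code, "->", triage_crawl_result(code))
```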

Troubleshooting Steps and Potential Solutions

This table outlines common troubleshooting steps and corresponding potential solutions for delayed index coverage reports.

| Troubleshooting Step | Potential Solution |
|---|---|
| Verify server uptime and resource usage. | Ensure server is functioning properly and monitor resource usage. |
| Review server logs for errors. | Identify and address any errors in server logs, such as file access or application errors. |
| Analyze crawl errors in Google Search Console. | Address specific errors reported by Googlebot, like 404 errors or server errors. |
| Check robots.txt file for blocking issues. | Review and modify robots.txt to allow access to necessary pages. |

Monitoring and Prevention

Staying on top of your Google Search Console index coverage report isn’t just a good idea; it’s crucial for a healthy website. Regular monitoring allows you to identify and address issues promptly, preventing significant drops in visibility and organic traffic. Ignoring these reports can lead to lost opportunities and a less effective online presence.

Proactive monitoring is key to maintaining a strong online presence.

It’s not just about reacting to problems; it’s about understanding trends and preventing issues before they impact your website’s performance. This proactive approach involves continuous monitoring, alert systems, and a consistent review process to maintain optimal indexing.

Continuous Monitoring of Index Coverage Reports

Regularly reviewing your Google Search Console index coverage report is vital for identifying and addressing potential issues. A delayed or inaccurate report can indicate problems with your website’s crawlability or indexability. By tracking these reports over time, you can pinpoint patterns and proactively address underlying problems before they impact your search ranking.


Setting Up Alerts for Index Coverage Issues

Google Search Console emails the owners and users of a property when it detects new index coverage issues. Check that email notifications are enabled in your Search Console settings so these messages reach you, enabling quick responses and minimizing the negative impact on your site. Acting on notifications about crawl errors, excluded URLs, and other indexing problems ensures you don’t miss critical updates.

Regularly Checking for Crawl Errors and Indexation Issues

Establishing a routine to check for crawl errors and indexation issues is a cornerstone of proactive website management. This involves regularly reviewing your Google Search Console report for errors and making necessary adjustments to your website’s structure or content. Scheduled checks, such as weekly or bi-weekly reviews, can help you identify and rectify issues before they escalate.
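One way to make a weekly or bi-weekly review concrete is to compare the URLs you expect to be indexed with the URLs the report lists as indexed. The sketch below assumes you have already collected both lists yourself (for example, from your sitemap and from an export of the report); the `coverage_summary` function and the example URLs are hypothetical.

```python
def coverage_summary(sitemap_urls, indexed_urls):
    """Return the share of sitemap URLs that are indexed, plus the ones that are missing."""
    sitemap = set(sitemap_urls)
    indexed = set(indexed_urls) & sitemap  # ignore indexed URLs not in the sitemap
    missing = sorted(sitemap - indexed)
    percent = round(100 * len(indexed) / len(sitemap), 1) if sitemap else 0.0
    return {"indexed_percent": percent, "missing": missing}


if __name__ == "__main__":
    expected = [
        "https://example.com/",
        "https://example.com/products/widget",
        "https://example.com/blog/post",
        "https://example.com/about",
    ]
    reported = [
        "https://example.com/",
        "https://example.com/products/widget",
        "https://example.com/about",
    ]
    print(coverage_summary(expected, reported))
```

Recording the percentage at each review gives you a simple trend line, so a sudden drop stands out even when the report itself updates slowly.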

Maintaining a Healthy Site Structure and Content Quality

A well-structured website with high-quality content is essential for good indexing. A clear sitemap, proper use of robots.txt, and a logical site architecture make it easier for search engine crawlers to understand and index your pages. Content quality, relevance, and freshness are also critical for maintaining good search visibility. Outdated or irrelevant content can lead to indexation issues.

Benefits of Proactive Monitoring and Prevention

| Proactive Monitoring and Prevention Measure | Benefits |
|---|---|
| Regularly reviewing index coverage reports | Early detection of indexing problems, allowing for swift solutions and minimal impact on search visibility. |
| Setting up alerts for index coverage issues | Instant notifications about indexing problems, enabling timely interventions and minimizing potential damage. |
| Scheduled checks for crawl errors and indexation issues | Proactive identification of issues before they impact rankings, reducing the likelihood of negative consequences. |
| Maintaining a healthy site structure and content quality | Improved crawlability, better indexing, and higher search engine rankings, leading to increased organic traffic. |

Alternatives and Workarounds

Sometimes, Google’s indexing process takes longer than expected. This delay can be frustrating, especially when you’ve published fresh content or updated existing pages. Luckily, there are several alternative methods to expedite the process and ensure Google sees your changes. These methods can be used in conjunction with the standard submission methods to maximize visibility and ensure that your website content is readily available to search engine users.

Alternative Methods for Informing Google

Various methods exist to expedite the indexing process beyond simply waiting. These include using Google Search Console tools, submitting sitemaps, and strategically managing robots.txt files. Each method plays a unique role in facilitating Google’s crawling and indexing process.

Using Google Search Console’s URL Inspection Tool

The URL Inspection tool in Google Search Console is a powerful diagnostic and submission tool. It allows you to submit URLs for indexing, providing immediate feedback on Google’s crawl status. You can use this tool to manually request indexing for new or updated content. This tool provides real-time insights into why a particular URL may not be indexed, offering crucial debugging information.

The Importance of Sitemaps

Sitemaps are XML files that list all the important pages on your website. They act as a roadmap for search engine crawlers, guiding them through your site’s structure and identifying critical pages. Submitting a sitemap ensures that Google is aware of all the essential pages and helps expedite the indexing process. Regular updates to your sitemap are crucial to keep Google informed of your website’s current structure and content.

This proactive approach ensures a comprehensive indexing process.
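For readers who maintain their sitemap by hand, here is a minimal sketch of generating one with Python’s standard library. It follows the sitemaps.org `urlset` / `url` / `loc` / `lastmod` structure; the `build_sitemap` helper and the example URLs are illustrative assumptions, and most CMS platforms or plugins will generate this file for you.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, lastmod) pairs, with lastmod as YYYY-MM-DD."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    # Serialize as UTF-8 bytes with an XML declaration, then decode to text.
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True).decode("utf-8")


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/blog/post", "2024-02-01"),
    ]))
```

Regenerating the file whenever pages are added or changed keeps the `lastmod` values meaningful.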

Managing Robots.txt Files

The robots.txt file is a crucial part of website management, acting as a directive for search engine crawlers. It tells crawlers which parts of your website they may crawl and which they should skip; it does not by itself remove a page from the index, which is the job of a noindex directive. Correctly configured robots.txt files are essential to avoid accidentally blocking critical content from being crawled and indexed. Ensure that your robots.txt file does not prevent Googlebot from accessing important pages.

This proactive approach avoids potential indexing issues.
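A quick way to check for accidental blocking is to test your important URLs against your robots.txt rules. The sketch below uses Python’s built-in `urllib.robotparser`; the rules and URLs are made up for illustration, and the `blocked_urls` helper is hypothetical. Note that Python’s parser does not reproduce every detail of how Google interprets robots.txt (wildcard patterns, for instance), so treat it as a first-pass check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    important = [
        "https://example.com/products/widget",
        "https://example.com/admin/login",
    ]
    print(blocked_urls(ROBOTS_TXT, important))
```

If a page you want indexed shows up in the returned list, the matching `Disallow` rule is the first thing to review.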

Comparing Content Submission Methods

| Method | Description | Pros | Cons |
|---|---|---|---|
| Google Search Console URL Inspection | Manually request indexing for specific URLs. | Provides real-time feedback; useful for specific pages. | Time-consuming for large amounts of content; may not be enough for new site sections. |
| Sitemap Submission | Submit an XML file listing all important pages. | Comprehensive way to inform Google of site structure; allows for updates. | Needs regular updates for new content; does not guarantee immediate indexing. |
| robots.txt Optimization | Ensure Googlebot can access all important content. | Crucial for website structure; prevents accidental blocking. | Requires careful configuration; may not be effective if the problem is elsewhere. |

Long-Term Strategies for Indexation

A delayed Google Search Console index coverage report can be a frustrating experience for website owners. While immediate troubleshooting is crucial, establishing long-term strategies is essential for maintaining consistent and optimal indexation. Proactive measures ensure your site remains visible and accessible to search engines, ultimately boosting your search engine rankings.

A strong indexation strategy is built on a foundation of consistent website health and high-quality content.

Ignoring these aspects can lead to prolonged indexation issues and diminished visibility in search results. Addressing these foundational elements guarantees long-term success in maintaining a prominent online presence.


Website Maintenance and Updates

Regular website maintenance and updates are crucial for maintaining optimal indexation. This involves not only fixing broken links and resolving technical errors but also ensuring the site’s overall functionality and structure are up-to-par. These proactive measures contribute significantly to search engine crawlers effectively indexing your website.

- Regularly audit your website for broken links, outdated content, and technical issues. A systematic approach using tools like Google Search Console and sitemaps helps to pinpoint problems quickly.

- Implement security measures. A secure website is paramount. Ensuring up-to-date security protocols and regular security audits safeguard your site against potential vulnerabilities that can disrupt indexation.

- Keep your website software and plugins updated. Outdated software can create security risks and hinder optimal performance. Regular updates patch vulnerabilities and improve site functionality, which ultimately benefits indexation.

High-Quality Content and Search Engine Rankings

High-quality content is the cornerstone of successful search engine rankings. Fresh, informative, and engaging content not only attracts users but also signals to search engines that your site is valuable and relevant. This, in turn, leads to improved indexation and higher search engine rankings.

- Create informative and engaging content that addresses user needs and provides valuable insights. Conduct thorough research to identify topics that resonate with your target audience.

- Maintain a consistent publishing schedule. Regular updates demonstrate to search engines that your site is active and dynamic, which signals relevance and freshness.

- Optimize your content for readability and searchability. Clear and concise writing, proper use of headings, and relevant keywords enhance the user experience and help search engines understand your content.

Effective Content Strategies for Improving Indexation

Implementing effective content strategies is crucial for improving indexation. Strategies must focus on creating valuable content and enhancing site structure to optimize crawling and indexing by search engines.

- Implement a robust internal linking strategy. Internal links connect different pages on your website, helping search engines discover and understand the relationships between content. This structured approach facilitates better crawling and indexation.

- Optimize page titles and meta descriptions. Compelling and accurate titles and descriptions are crucial for attracting clicks and informing search engines about the page’s content.

- Utilize schema markup. Schema markup provides structured data about your website’s content, helping search engines understand and interpret it more effectively.
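To make the schema markup point concrete, here is a minimal sketch that produces a JSON-LD block for an article page. The `@context`, `@type`, `headline`, `datePublished`, and `author` properties come from the schema.org vocabulary; the `article_json_ld` helper and the example values are assumptions for illustration, and the properties you need will depend on your content type.

```python
import json


def article_json_ld(headline, url, date_published, author_name):
    """Return a <script> tag containing schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "url": url,
        "datePublished": date_published,
        "author": {"@type": "Person", "name": author_name},
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    print(article_json_ld(
        "Index Coverage Report Delayed",
        "https://example.com/blog/index-coverage-delay",
        "2024-02-01",
        "Jane Doe",
    ))
```

The resulting tag goes in the page’s HTML, typically inside the `<head>` element.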

Long-Term Indexation Success Strategies

| Strategy | Description | Impact |
|---|---|---|
| Regular Website Maintenance | Proactively addressing technical issues, broken links, and security vulnerabilities. | Improved website health, enhanced crawlability, and better indexation. |
| High-Quality Content Creation | Producing informative, engaging, and valuable content that addresses user needs. | Increased user engagement, higher search engine rankings, and improved indexation. |
| Consistent Website Updates | Regularly adding new content and updating existing pages. | Demonstrates site activity, signals freshness, and improves indexation. |

Visual Representation of Indexation Issues

A delayed Google Search Console index coverage report can significantly impact a website’s visibility in search results. Understanding the flow of indexing and the potential bottlenecks is crucial for effective troubleshooting and recovery. This section visualizes the indexing process and highlights the consequences of delays.

Illustrative Representation of the Indexing Process

The indexing process is a multi-stage journey from content creation to appearing in search results. Think of it like a pipeline: content is created, then crawled, indexed, and finally ranked. A delay at any stage can disrupt the entire process, affecting a website’s discoverability.

Flowchart of the Indexing Process

Imagine a funnel. Content creation is at the top, representing the source of new information. The funnel narrows as the content is crawled by search engine bots, then indexed, and finally ranked for relevant search queries. A delay in any stage results in a reduced flow of the website’s content into the search engine’s index, ultimately impacting search visibility.

Stages of Crawl, Index, and Rank

- Content Creation: This is the starting point, where new pages or updates are published. The frequency of new content is a crucial factor in keeping the website fresh and relevant to search engines.

- Crawling: Search engine bots (crawlers) systematically visit web pages to discover and gather information. This is a crucial stage, and delays here can prevent new content from being discovered.

- Indexing: The gathered information is processed and stored in the search engine’s index. This is where content is prepared for retrieval in search results.

- Ranking: When a user performs a search, the search engine ranks websites based on various factors. Well-indexed content, relevant to the search query, will appear higher in the results.

Impact of Indexation Delays on Different Stages

A delay in indexation can affect different stages in various ways. For instance, if a site update isn’t crawled promptly, new content won’t be indexed, and the website won’t appear in search results for relevant queries. Similarly, delays in indexing could lead to old content appearing higher than newer, more relevant content, further impacting visibility.

Table: Indexing Process and Potential Delays

| Stage | Potential Delay Cause | Impact on Website |
|---|---|---|
| Content Creation | Lack of regular updates, poor content quality | Reduced frequency of new content, lower relevance |
| Crawling | Slow site speed, technical issues (e.g., robots.txt errors, server problems) | New content may not be discovered, existing content may be missed |
| Indexing | Large site size, server issues, content duplication, errors in sitemap | New content might not be included, existing content might not be correctly indexed |
| Ranking | Competitor content, algorithm changes, low user engagement | Website might not rank highly for relevant searches |

Final Conclusion

In conclusion, a delayed Google Search Console index coverage report can be a significant setback for website performance. By understanding the potential causes, implementing effective troubleshooting strategies, and proactively monitoring your site, you can mitigate the negative impact and maintain a healthy website structure. This comprehensive guide equips you with the knowledge and tools to address these delays and ensure your site remains visible and accessible to search engines.